Whether you call them self-driving cars, autonomous vehicles, or even robo-cars, autonomous driving is a leading topic in the automotive industry. The idea might conjure images of futuristic, slick, silver vehicles that resemble spaceships more than the cars you see on the road today. But the opposite is more likely to be true.

Vehicles with varying levels of driving automation are on the road now—maybe even that unassuming minivan in the next lane—and the reality is that the automotive industry is in a period of transition, where advanced driver assistance systems (ADAS) are paving the way for fully autonomous vehicles.

ADAS First

The introduction of ADAS and active safety features equipped cars with abilities they did not have before, such as using sensors to perceive the external environment. Sensors themselves are not new: for more than two decades, they have been used in applications like engine management and passive safety, for closed-loop controls, or to react to events happening to the car, such as a hydroplaning wheel or a crash.

ADAS continually perceives and scans the external environment, which helps the vehicle not only react to events but also anticipate problems. Sensors can widen and sharpen the driver’s view with features such as surround view and night vision. They can also help prevent accidents through warnings such as lane-departure alerts and, when necessary, through counteractions such as automated emergency braking.

Here are the technical steps and challenges in the progression from ADAS to autonomous driving.

Defining the Evolution of Autonomous Vehicles

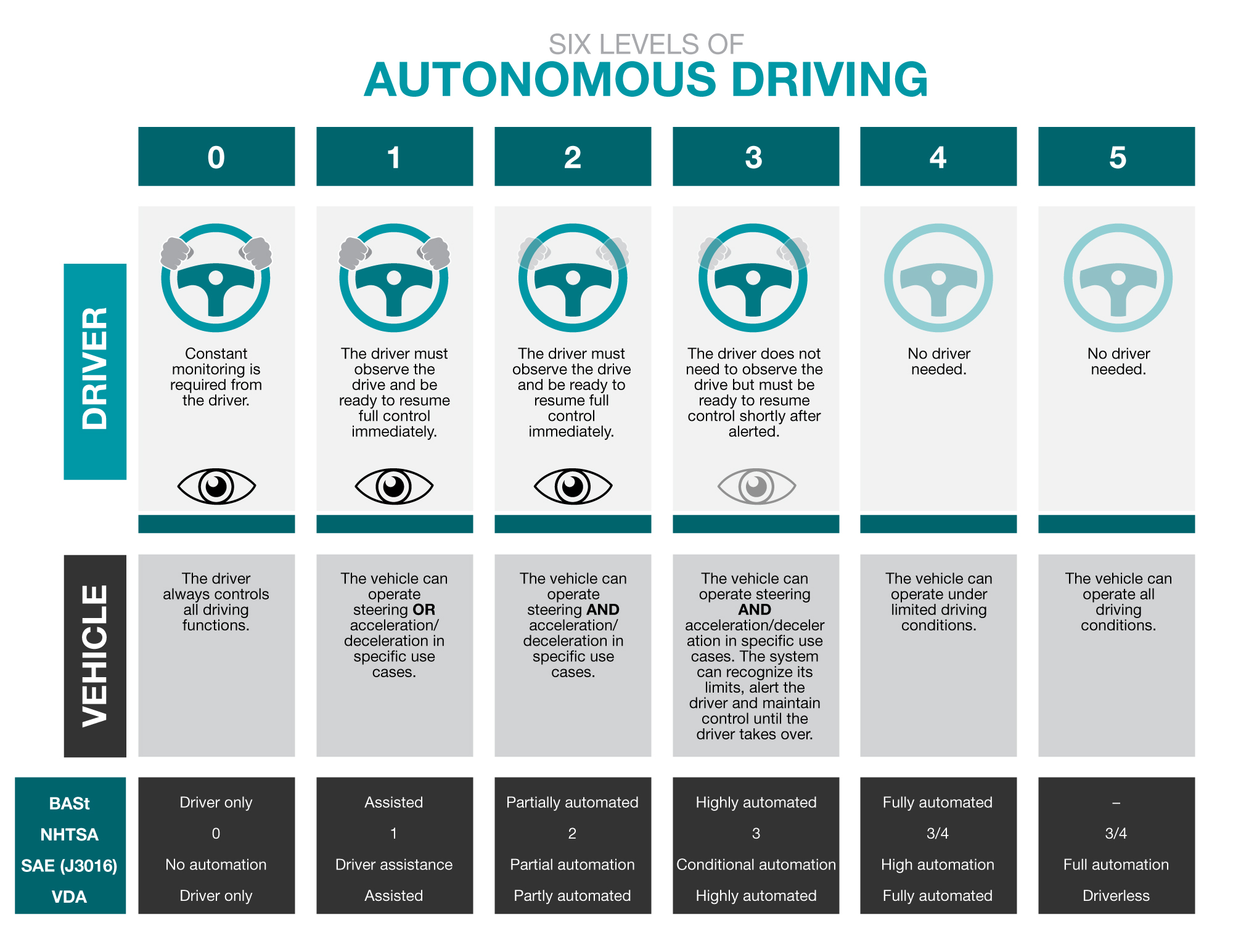

Multiple international and national standardization bodies have developed definitions for levels of autonomy, mapping out the steps from no assistance to fully autonomous driving. While charts like Figure 1 may suggest steady, incremental development, in reality, each milestone requires a disruptive change in system architecture, safety functionality, processing performance, or software.

Figure 1: Five levels of autonomous driving as defined by the Bundesanstalt für Straßenwesen (BASt), National Highway Traffic Safety Administration (NHTSA), Society of Automotive Engineers (SAE) International, and Verband der Automobilindustrie (VDA).

Level 0

Even without autonomous capabilities, Level 0 marks an important milestone, because it indicates the presence of sensors and electronics to improve driver performance. Informational ADAS provides surround-view and rear-view cameras, while machine vision can help identify dangerous situations and warn drivers with features such as a front camera with lane-departure warning, ultrasonic park assistance, and blind-spot detection radar.

System Topology

At Level 0, all systems can be independent and perform their tasks without significant data transfer between them. Slow-speed data interfaces like Controller Area Network (CAN) are usually sufficient. Video for informational ADAS is only displayed for the driver but not used for machine vision.

Processing

All data processing and algorithms for machine vision are performed on the edge (at the sensor’s location) or in a dedicated electronic control unit (ECU) with application processors selected for the necessary performance.

Safety

Given that drivers must observe their environment and stay in control of the car at all times, the loss of ADAS functionality would not lead to immediate danger. Erratic system behavior and false alarms should still be avoided, as they can distract drivers and lead to undesired reactions.
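One common way to reduce false alarms is to require a detection to persist over several consecutive sensor frames before warning the driver. The following is a minimal sketch of that idea; the class name `DebouncedWarning` and the three-frame threshold are illustrative assumptions, not values from any particular system.

```python
class DebouncedWarning:
    """Raise a warning only after a detection persists for several frames.

    Hypothetical helper for illustration; the threshold is an assumption.
    """

    def __init__(self, frames_required: int = 3) -> None:
        self.frames_required = frames_required
        self._streak = 0  # number of consecutive frames with a detection

    def update(self, detected: bool) -> bool:
        # A single frame without a detection resets the streak.
        self._streak = self._streak + 1 if detected else 0
        return self._streak >= self.frames_required


warning = DebouncedWarning(frames_required=3)
frames = [True, False, True, True, True, True, False]
states = [warning.update(f) for f in frames]
print(states)
```

A higher threshold suppresses more spurious detections but delays the warning, so the value would have to be tuned against the sensor's frame rate.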

Synergy

Because the systems operate independently, no real synergy occurs between them.

Levels 0 to 1

The car can assist the driver under certain conditions, either steering with lane-keep assist or decelerating/accelerating through autonomous emergency braking and adaptive cruise control.

System Topology

The various sensor systems can still be independent, with completely local processing and decision-making, but they need to connect to other ECUs in the car in order to issue commands for acceleration and steering. The amount of data is still small, so interfaces such as CAN remain sufficient. One sensor type is often sufficient to perform a given Level 1 function.

Processing

Processing still takes place on the edge, with little to no influence from other systems. Algorithms become more complex because they control certain driving functions.
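To illustrate how a single sensor can drive a Level 1 function, here is a rough sketch of an adaptive cruise control law based on a constant time-gap policy. This is one textbook-style approach, not necessarily what any production system uses; the gains, limits, and names (`RadarTrack`, `acc_acceleration`) are assumptions chosen for readability.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RadarTrack:
    gap_m: float           # distance to the lead vehicle
    range_rate_mps: float  # negative when the gap is shrinking


def acc_acceleration(
    ego_speed_mps: float,
    set_speed_mps: float,
    track: Optional[RadarTrack],
    time_gap_s: float = 1.8,    # assumed following time gap
    standstill_m: float = 5.0,  # assumed minimum gap at standstill
    k_speed: float = 0.4,       # illustrative controller gains
    k_gap: float = 0.2,
    k_rate: float = 0.6,
    a_min: float = -3.0,        # assumed comfort limits in m/s^2
    a_max: float = 1.5,
) -> float:
    """Return an acceleration request in m/s^2."""
    # Cruise mode: converge on the driver's set speed.
    a_cruise = k_speed * (set_speed_mps - ego_speed_mps)
    if track is None:
        a = a_cruise
    else:
        # Follow mode: hold a gap that grows with ego speed.
        desired_gap = standstill_m + time_gap_s * ego_speed_mps
        a_follow = k_gap * (track.gap_m - desired_gap) + k_rate * track.range_rate_mps
        # Never accelerate harder than cruise mode would allow.
        a = min(a_cruise, a_follow)
    return max(a_min, min(a_max, a))


free_road = acc_acceleration(25.0, 30.0, None)
closing_in = acc_acceleration(30.0, 30.0, RadarTrack(gap_m=20.0, range_rate_mps=-5.0))
print(free_road, closing_in)
```

The resulting request would then be sent to the powertrain and brake ECUs, which is why even this self-contained function needs a connection to the rest of the car.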

Safety

Safety requirements increase because the ADAS can perform driving operations without the driver initiating or controlling them. Incorrect operation may now lead to dangerous situations or accidents.

Synergy

No significant difference from Level 0: systems operate independently without sharing information or data.

Levels 1 to 2

By performing lateral as well as longitudinal control, the car can perform more complex driving maneuvers. These are still considered assistance functions; drivers must continue to monitor the situation at all times and be ready to resume control immediately.

System Topology

A coordinated effort between different sensors and ECUs makes data connections between all ECUs necessary, as well as a central entity that makes the final decision and issues control commands. The data shared is typically object data and does not need high-bandwidth interfaces.

Processing

Image processing and object identification can still occur at the sensor’s location, but a single entity combines the data from the different sources and makes decisions. Optimized processor technologies typically handle the different processing tasks.

Safety

Safety requirements at the sensor modules remain the same or can be slightly lower than in the previous level, because driving decisions and control of driving functions no longer reside in the sensor module. The central decision-making unit has higher safety requirements because of the control it has over the car. In fault or unexpected conditions, the system is designed to transfer control back to the driver immediately.

Synergy

Using data from different sensors allows more robust decision-making and helps overcome the shortcomings of individual sensor types. As long as processing occurs at the sensor location, only a limited set of data is available at the central decision-making unit.
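As a rough illustration of object-level fusion, the sketch below pairs radar and camera objects by distance and keeps the attribute each sensor is assumed to measure best. The data classes, the 3 m association gate, and the assumption that radar is stronger on range and speed while the camera is stronger on lateral position and classification are simplifications made for this example.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Optional, Tuple


@dataclass
class RadarObject:
    x_m: float        # longitudinal position
    y_m: float        # lateral position
    speed_mps: float  # relative speed


@dataclass
class CameraObject:
    x_m: float
    y_m: float
    label: str        # object class, e.g. "car"


@dataclass
class FusedObject:
    x_m: float
    y_m: float
    speed_mps: Optional[float]
    label: Optional[str]
    sources: Tuple[str, ...]


def fuse(radar: List[RadarObject], camera: List[CameraObject],
         gate_m: float = 3.0) -> List[FusedObject]:
    fused: List[FusedObject] = []
    unused = list(camera)
    for r in radar:
        # Nearest-neighbor association inside a distance gate.
        best = min(unused, key=lambda c: hypot(c.x_m - r.x_m, c.y_m - r.y_m),
                   default=None)
        if best is not None and hypot(best.x_m - r.x_m, best.y_m - r.y_m) <= gate_m:
            unused.remove(best)
            # Radar contributes range and speed; camera contributes lateral
            # position and class.
            fused.append(FusedObject(r.x_m, best.y_m, r.speed_mps, best.label,
                                     ("radar", "camera")))
        else:
            fused.append(FusedObject(r.x_m, r.y_m, r.speed_mps, None, ("radar",)))
    # Camera-only objects are kept, but without a speed estimate.
    for c in unused:
        fused.append(FusedObject(c.x_m, c.y_m, None, c.label, ("camera",)))
    return fused


result = fuse(
    [RadarObject(50.0, 0.3, -2.0)],
    [CameraObject(48.5, 0.1, "car"), CameraObject(20.0, -3.5, "pedestrian")],
)
for obj in result:
    print(obj)
```

Because only object lists reach the fusion step, anything the sensor modules discarded during local processing cannot be recovered here.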

Levels 2 to 3

With the vehicle performing steering and acceleration autonomously in certain instances, drivers can divert their attention away from the immediate driving task. This level has significant implications for hardware and software because the driver must be warned and given a window of time before resuming control of the vehicle.

System Topology

With the number and types of sensors increasing, a different system topology becomes viable. Sensor modules without local processing are smaller, require less power and cost less. Because the sensor data is not processed at the sensor location, high-bandwidth, low-latency interfaces become necessary.
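A back-of-the-envelope calculation shows why the interface requirements change so sharply once raw data leaves the sensor. The camera resolution, bit depth, frame rate, and object-list size below are assumed values for illustration only, and protocol overhead is ignored; the 1 Mbit/s figure is the nominal maximum bit rate of classical CAN.

```python
CAN_CLASSIC_BPS = 1_000_000  # nominal maximum bit rate of classical CAN


def raw_camera_bps(width_px: int, height_px: int, bits_per_px: int, fps: int) -> int:
    """Uncompressed video data rate in bits per second."""
    return width_px * height_px * bits_per_px * fps


def object_list_bps(objects: int, bytes_per_object: int, updates_per_s: int) -> int:
    """Data rate of a pre-processed object list in bits per second."""
    return objects * bytes_per_object * 8 * updates_per_s


# Assumed example values, not taken from a specific sensor.
raw = raw_camera_bps(width_px=1280, height_px=800, bits_per_px=12, fps=30)
objs = object_list_bps(objects=32, bytes_per_object=16, updates_per_s=20)

print(f"raw camera stream: {raw / 1e6:.2f} Mbit/s")
print(f"object list:       {objs / 1e3:.2f} kbit/s")
print(f"raw stream vs. classical CAN: {raw / CAN_CLASSIC_BPS:.0f}x the bus capacity")
```

Under these assumptions, a single raw camera stream exceeds classical CAN by more than two orders of magnitude, while the object list uses under a tenth of the bus.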

Processing

With raw data coming from sensors, the central ECU must have sufficient performance and optimized processors to handle image processing, object identification and classification, sensor fusion, and decision-making.

Safety

Requirements increase again at this level with so much of the processing moving into the central ECU, as well as the fact that the system can’t immediately return control to the driver. Some sensor or processing functions need to maintain functionality even under fault conditions to safely hand control back to the driver in a defined time frame.
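The timed handover can be pictured as a small state machine. The sketch below is a simplified assumption of how such logic might look: the 10-second window, the mode names, and especially the fallback stop when the driver does not respond are illustrative additions rather than details from the level definitions.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    AUTOMATED = "automated"
    TAKEOVER_REQUESTED = "takeover_requested"
    MANUAL = "manual"
    FALLBACK_STOP = "fallback_stop"  # assumed behavior if the driver never responds


class HandoverManager:
    def __init__(self, takeover_window_s: float = 10.0) -> None:
        self.window_s = takeover_window_s  # assumed value
        self.mode = Mode.AUTOMATED
        self._requested_at: Optional[float] = None

    def step(self, now_s: float, fault_detected: bool, driver_has_control: bool) -> Mode:
        if self.mode is Mode.AUTOMATED and fault_detected:
            # Warn the driver and start the clock; the system keeps driving.
            self.mode = Mode.TAKEOVER_REQUESTED
            self._requested_at = now_s
        elif self.mode is Mode.TAKEOVER_REQUESTED:
            if driver_has_control:
                self.mode = Mode.MANUAL
            elif now_s - self._requested_at >= self.window_s:
                self.mode = Mode.FALLBACK_STOP
        return self.mode


manager = HandoverManager(takeover_window_s=10.0)
print(manager.step(0.0, fault_detected=False, driver_has_control=False))
print(manager.step(1.0, fault_detected=True, driver_has_control=False))
print(manager.step(5.0, fault_detected=True, driver_has_control=True))
```

While the state is `TAKEOVER_REQUESTED`, the vehicle is still driving itself with a known fault, which is why part of the sensing and processing chain has to remain functional during that window.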

Synergy

Most or all sensor data goes to a central location without data loss or alteration from pre-processing in the sensor modules, enabling maximum synergy during sensor data fusion.

Levels 3 to 4/5

At Level 4, the driver is not required to drive; think of a robo-taxi maneuvering a designated course. Level 5 achieves fully autonomous driving. In addition to perceiving its environment through sensors and deciding driving maneuvers, the vehicle must now also perform complete mission planning. It needs to know where it is, where it needs to go, and how best to reach its destination. Levels 4 and 5 best resemble what is often called “autopilot” in movies, like the futuristic robo-car.

System Topology

In addition to the sensors needed to perceive its immediate surroundings, Levels 4 and 5 require precision GPS to determine the car’s current location, a map to identify the best route, and the ability to connect to other sources of real-time information through 4G or 5G, WiFi, and/or V2X (the car’s connection to the outside world). Most sensors will focus on high-resolution data acquisition and/or the processing of that data, while mission planning and decision-making occur in a central location. Mission-critical functions must operate even under fault conditions.

Processing

In addition to handling all driving functions, the vehicle now handles route planning and execution as well.
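Route planning over a map is commonly modeled as a shortest-path search on a graph. The sketch below uses Dijkstra's algorithm on a tiny, made-up road network in which the edge weights stand for travel time in minutes; real planners work on far larger maps and typically use more elaborate cost models.

```python
import heapq
from typing import Dict, List, Optional, Tuple

RoadGraph = Dict[str, Dict[str, float]]


def shortest_route(graph: RoadGraph, start: str, goal: str) -> Optional[Tuple[float, List[str]]]:
    """Dijkstra's algorithm; returns (total_cost, path) or None if unreachable."""
    queue: List[Tuple[float, str, List[str]]] = [(0.0, start, [start])]
    settled: Dict[str, float] = {}
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled and settled[node] <= cost:
            continue  # already reached this node at least as cheaply
        settled[node] = cost
        for neighbor, weight in graph.get(node, {}).items():
            heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return None


# Hypothetical road network: travel times in minutes between junctions.
roads: RoadGraph = {
    "A": {"B": 4.0, "C": 2.0},
    "B": {"D": 5.0},
    "C": {"B": 1.0, "D": 8.0},
    "D": {},
}

route = shortest_route(roads, "A", "D")
print(route)
```

Real-time traffic information received over the vehicle's data connection could be folded in by updating the edge weights and planning again.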

Safety

Without a driver to take over (there might not even be a steering wheel), the vehicle must safely operate under all road and weather conditions as well as during faults in the system itself. Fail-safe is not sufficient anymore; reliable operation during a failure is needed for mission-critical functions.
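One well-known way to keep operating through a single failure is redundancy with voting. The sketch below shows a simple median-based voter over three redundant channels; it is a generic fault-tolerance technique offered as an illustration, not a description of any specific vehicle architecture, and the function name and tolerance value are assumptions.

```python
from statistics import median
from typing import Optional, Sequence


def vote(channels: Sequence[Optional[float]], tolerance: float) -> float:
    """Return a value agreed on by at least two redundant channels.

    A channel reporting None is treated as failed. Raises RuntimeError
    when fewer than two channels agree within the tolerance.
    """
    valid = [v for v in channels if v is not None]
    if len(valid) < 2:
        raise RuntimeError("not enough healthy channels")
    mid = median(valid)
    agreeing = [v for v in valid if abs(v - mid) <= tolerance]
    if len(agreeing) < 2:
        raise RuntimeError("channels disagree")
    return sum(agreeing) / len(agreeing)


# Three redundant steering-angle estimates (radians); the third is faulty.
steering = vote([0.10, 0.11, 5.0], tolerance=0.05)
# One channel has dropped out entirely; the remaining two still agree.
degraded = vote([0.10, None, 0.12], tolerance=0.05)
print(steering, degraded)
```

With three channels, the system rides through one wrong or missing value without interruption, which is the difference between fail-operational and merely fail-safe behavior.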

Synergy

In addition to the data from its own sensors, the system also connects to external data from a GPS, various cloud-based services through 4G or 5G, or other vehicles and infrastructure (WiFi/V2X).

Deployment and Integration of Autonomous Vehicles

While we will see more cars with ADAS and autonomous functions on the road, achieving a higher level of autonomy does not make vehicles with lower levels obsolete or replace them. The lifetime of a car (which can be 15 years or more) as well as the fleet composition of car manufacturers will lead to a mix of several levels of autonomy on the street as well as in production.

The adoption and implementation of a new system topology will enable OEMs to build lower-level autonomous systems in a manner similar to premium systems.

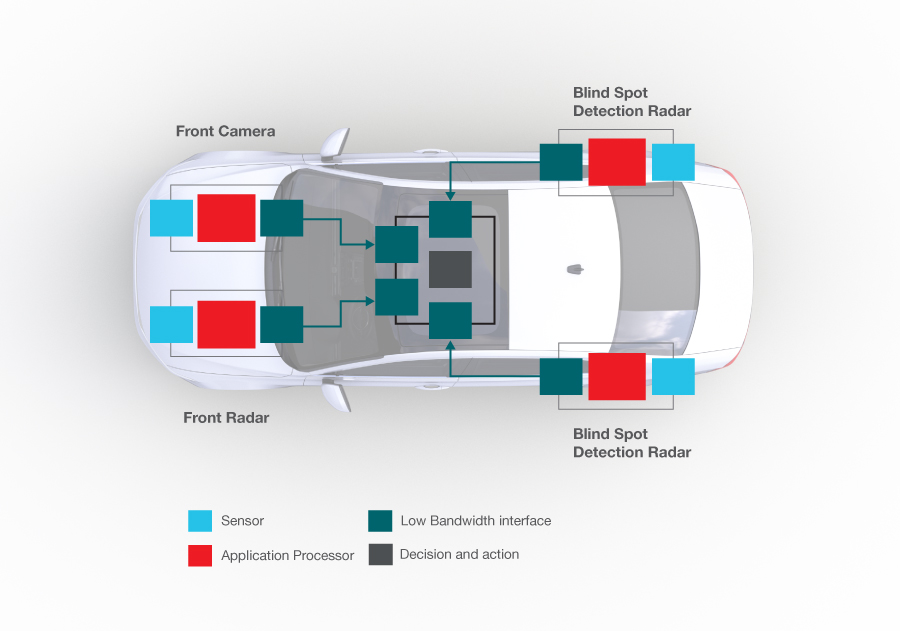

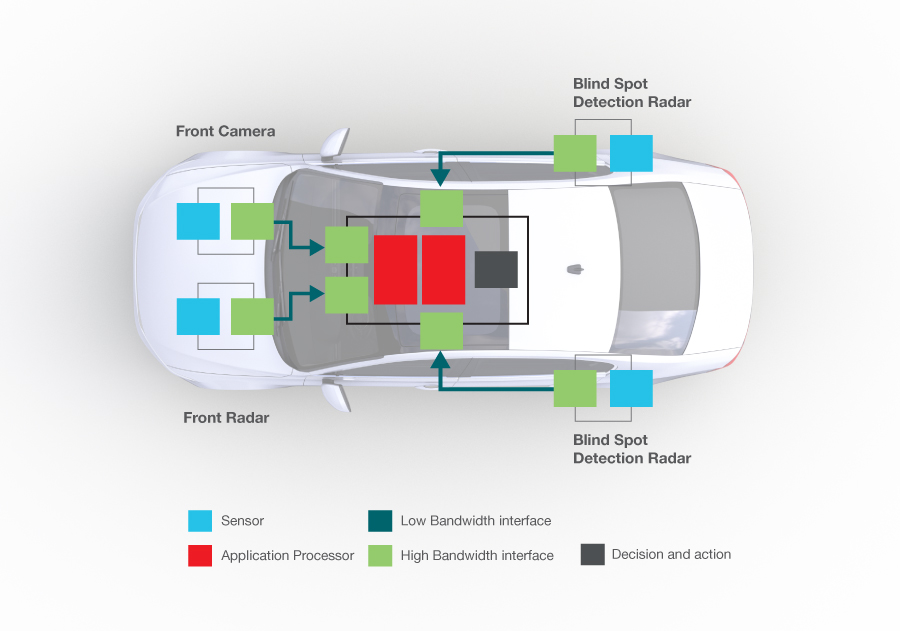

Figures 2 and 3 show a combination of ADAS functions in two different topologies. Both systems behave similarly from the driver’s point of view, but they use very different approaches.

The overall market strategy of vehicle manufacturers has a significant influence on how to approach and advance ADAS and autonomous driving. For some, the main goal could be to achieve 5-star crash ratings and provide multiple assistance and automation functions; others might take a direct route to offering only Level 4 or 5 driverless vehicles. The first strategy builds on a car’s existing characteristics, like a designated front and back and operational equipment such as a steering wheel. A design aimed directly at autonomous vehicles, however, doesn’t have to follow the conventions usually associated with designing a car.

Figure 2: Combining existing systems with a central decision-maker.

Figure 3: A centralized processing and decision-making system connected to sensor modules with no local processing.

Reaching higher levels of autonomy can require disruptive changes to parts of the system compared with what is commonly used today. Simply adding more of the same type of sensor module within the same system topology does not get you from Level 0 to Level 4 or 5.