The core principles of “the cloud”, as part of the internet, have significantly changed how software is developed and deployed. In particular, virtual machines and containers have shaped the system architecture of 5G. Here’s how.

The concept of cloud computing has been around for decades, dating back as early as the 1950s, when multiple users could execute tasks on central computers from simple terminals rather than on the terminals themselves. Similar to Google’s Chromebook today or many apps on our smartphones, such devices only work if they have network access, as they are merely the interface to a service running elsewhere. From the 1950s through the 1970s, the split between the terminal and the “cloud” always occurred within the same building or campus, with only limited compute, storage, or memory resources available to the terminals. Those years lacked the internet as we know it, which connects terminals and clouds beyond a single building and allows them to reach any cloud from anywhere.

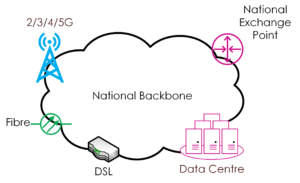

Today, the internet connects a range of individual computer networks, such as DSL or 5G, through a global backbone spanning continents and national exchange points in each country. Figure 1 illustrates this mix of individual computer networks with a national grid and exchange points. Data centers can likewise be seen as computer networks, where internet services are located and offered to clients connected to the national backbone over an access network such as 5G, DSL, or fiber.

Data centers operated by third-party organizations offer the ability to host services such as an email server, website, or chat server. Interconnecting clients and services correctly relies on a set of standardized communication protocols that let endpoints, e.g. clients and data centers, exchange information. The Internet Protocol (IP) is the common denominator in these exchanges: it lets one endpoint address the other and routes packets through the internet. On top of IP, further protocols form a protocol stack that ensures reliable packet transmission (if desired) and lets applications exchange user data, such as IP+TCP+HTTP for the web or IP+TCP+IMAP/POP3 for email. Most importantly, any client can, in principle, reach any service on the internet, even beyond its own country’s borders.
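
To make the layering concrete, the sketch below builds only the application-layer part of an IP+TCP+HTTP exchange: a plain HTTP/1.1 request message. The host `example.com` and the function name `build_http_get` are assumptions for illustration. The bytes it returns would be handed to the operating system’s TCP/IP stack through a socket, which adds reliable delivery (TCP) and addressing and routing (IP) underneath.

```python
def build_http_get(host: str, path: str = "/") -> bytes:
    """Build a minimal HTTP/1.1 GET request (the application layer only).

    TCP (reliable byte stream) and IP (addressing and routing) are added
    underneath by the operating system once these bytes are written to a socket.
    """
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",  # the Host header is mandatory in HTTP/1.1
        "Connection: close",
        "",  # an empty line terminates the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


if __name__ == "__main__":
    print(build_http_get("example.com"))
```

Writing these bytes to a TCP connection on port 80 of the server (for example one opened with Python’s `socket.create_connection`) would complete the IP+TCP+HTTP stack described above.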

Driven by the extraordinary increase in services available online since the introduction of 3G and internet-enabled handheld devices, data centers require technologies that can scale services with demand while keeping individual services separate. The ability to separate the compute, storage, and networking resources within a data center dates back to the 1970s, when Virtual Machines (VMs) isolated applications from each other to utilize hardware more efficiently.

VMs are no different from ordinary computers with hardware, an operating system, and applications. The only, but important, difference is the virtualization of hardware through a special software layer that lets more than one VM run on the actual hardware. For instance, if the hardware offers eight CPUs to execute software, the virtualization layer makes each VM believe it has sole access to all eight CPUs, and any other VM on the same hardware perceives the same available hardware. Thus, it is possible to overprovision cloud resources (CPU and networking), assuming no single VM continuously requires 100% of its assigned compute capabilities. If several VMs do, the virtualization layer schedules the available resources based on predefined service level agreements or a set of scheduling policies.
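
The overprovisioning argument can be made concrete with a small calculation. The sketch below assumes the host from the example above, with eight physical CPUs shared by several VMs that were each promised all eight. When the summed demand fits, every VM is served in full; when it does not, the sketch falls back to a weighted max-min fair share, where hypothetical per-VM weights stand in for service level agreements. Real hypervisor schedulers are considerably more involved.

```python
PHYSICAL_CPUS = 8.0  # assumption: the eight-CPU host from the example


def allocate(demand: dict[str, float], weight: dict[str, float],
             capacity: float = PHYSICAL_CPUS) -> dict[str, float]:
    """Weighted max-min fair share of `capacity` CPUs among VMs.

    If total demand fits, every VM gets what it asks for (overprovisioning
    pays off). Otherwise, capacity is split in proportion to the (positive)
    weights, and any share a VM does not need is redistributed to the others.
    """
    alloc = {vm: 0.0 for vm in demand}
    active = {vm for vm, d in demand.items() if d > 0}
    remaining = capacity
    while active and remaining > 1e-9:
        total_w = sum(weight[vm] for vm in active)
        # Tentative share of the remaining capacity for each still-active VM.
        share = {vm: remaining * weight[vm] / total_w for vm in active}
        satisfied = {vm for vm in active if demand[vm] - alloc[vm] <= share[vm]}
        if not satisfied:
            # No VM can be fully served: hand out the proportional shares.
            for vm in active:
                alloc[vm] += share[vm]
            break
        # Fully serve VMs whose residual demand fits; free up the rest.
        for vm in satisfied:
            remaining -= demand[vm] - alloc[vm]
            alloc[vm] = demand[vm]
        active -= satisfied
    return alloc
```

For example, demands of 2, 3, and 1 CPUs with equal weights are all served in full, even though each VM was promised eight. Demands of 8, 8, and 2 CPUs with weights 2, 1, and 1 yield 4, 2, and 2 CPUs: the VM needing only 2 is satisfied first, and the remaining 6 CPUs are split 2:1.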

The concept of VMs also lets the same service be deployed more than once for redundancy and/or load-balancing purposes. However, simply placing a service into a VM and deploying it with enough redundancy to scale with the incoming load of clients is not viable. Running and operating VMs is neither flexible nor dynamic, especially when capacity is planned on an estimate of how much traffic might arrive. In addition, deploying a new VM might take several minutes, depending on its size and on the complexity of integrating it into the data center logic that routes packets from clients to an individual VM.
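
Deploying the same service more than once only helps if incoming requests are spread across the copies. A minimal round-robin load balancer can be sketched as follows, assuming a fixed list of replica addresses (the IP addresses and the class name are hypothetical). The fixed list also hints at the problem described above: adding capacity means deploying another VM and updating the list, which is the slow step.

```python
import itertools


class RoundRobinBalancer:
    """Spread requests evenly over a fixed set of service replicas."""

    def __init__(self, replicas: list[str]) -> None:
        if not replicas:
            raise ValueError("at least one replica is required")
        self._cycle = itertools.cycle(replicas)

    def pick(self) -> str:
        """Return the address of the replica that should serve the next request."""
        return next(self._cycle)


if __name__ == "__main__":
    # Hypothetical addresses of two VMs running the same service.
    lb = RoundRobinBalancer(["10.0.0.11", "10.0.0.12"])
    print([lb.pick() for _ in range(4)])
```

Round robin is just one simple policy; it assumes all replicas are equally capable and healthy.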

As a result, container technologies such as Linux Containers (LXC), introduced in the late 2000s, and later Docker were adopted, allowing an application to be placed into a much lighter environment that can be deployed within seconds at most. With the ability to bring applications up and down in the blink of an eye, the cloud underwent another evolution in how software is written for container environments.

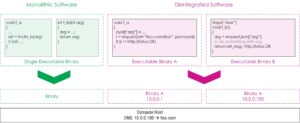

A software design pattern for services that need to scale disintegrates the application into smaller software components that communicate with each other over the network. Such “disintegration” is illustrated in Figure 2, which shows a monolithic software design on the left (in green) and a disintegrated software design on the right (in magenta).

The monolithic software example is composed of two functions, f_a and f_b, where Function f_a calls f_b with Argument arg. Function f_b then processes the given argument and returns a result. The code is compiled into a single binary for the architecture of the compute host. In contrast, the right-hand side implements the same logic as separate binaries. Each binary is placed into its own container (e.g. Docker or Linux Container), so each container sees its own local network interface with a distinct IP address and no files on its local filesystem other than the required binary and libraries (if any). As a result, instead of calling the other function directly within the binary, Function f_a sends an HTTP request message to the URL foo.com/bar, carrying Argument arg as the payload.

In this example, JavaScript Object Notation (JSON) serves as the payload’s syntax, so the payload would look as follows: {"arg": "..."}, where "..." stands for any string of characters. In Function f_a, the request() function comes from an HTTP library that constructs the packet and runs a Domain Name System (DNS) query asking for the IP address of “foo.com”. In this example, DNS returns the IP address 10.0.0.100, which identifies the container of Function f_b. So when the request() function in f_a constructs the HTTP packet and sends it to 10.0.0.100, f_b receives it. Note that the HTTP library used in this example takes over the task of implementing an HTTP server and parsing incoming messages. Thus, all Function f_b must do is access Argument arg in the incoming request. As HTTP is based on request/response semantics, Function f_b must issue a return message indicating whether the information was processed correctly; in this case it sends an “OK” back to the sender.
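
In the monolithic variant, f_a would simply call f_b(arg) inside one binary. The disintegrated variant can be sketched with Python’s standard library: `http.client` stands in for the HTTP library whose `request()` call f_a uses, and `http.server` implements the server side of f_b’s container. To keep the sketch runnable on a single machine, it assumes f_b listens on `127.0.0.1` on an OS-assigned port rather than being reached by resolving `foo.com` via DNS to `10.0.0.100`. The path `/bar`, the JSON payload, and the “OK” reply follow the text; `FbHandler` and `start_fb_server` are hypothetical names.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class FbHandler(BaseHTTPRequestHandler):
    """f_b's container: the HTTP library implements the server and parsing."""

    def do_POST(self) -> None:
        if self.path != "/bar":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        arg = payload["arg"]  # all f_b has to do is access Argument arg
        self.server.last_arg = arg  # remembered for demonstration only
        body = b"OK"  # request/response semantics: confirm processing
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the console quiet


def start_fb_server() -> tuple[HTTPServer, str, int]:
    """Hypothetical helper: run f_b on localhost on any free port."""
    server = HTTPServer(("127.0.0.1", 0), FbHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    return server, host, port


def f_a(arg: str, host: str, port: int) -> str:
    """Send arg as a JSON payload to f_b over HTTP instead of a function call."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("POST", "/bar",
                     body=json.dumps({"arg": arg}).encode("utf-8"),
                     headers={"Content-Type": "application/json"})
        return conn.getresponse().read().decode("utf-8")
    finally:
        conn.close()


if __name__ == "__main__":
    server, host, port = start_fb_server()
    print(f_a("hello", host, port))  # f_b answers "OK"
    server.shutdown()
    server.server_close()
```

In a container deployment, `host` would be the name foo.com, and the DNS lookup would happen inside the HTTP library before the connection is opened.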

A concrete example of the abstract software above is a database that manages stateful information, e.g. usernames and passwords, combined with a website that implements the front end to visualize the stateful data. These two components reside in two separate containers, and the front-end container can now be deployed multiple times within the data center to scale with the number of users. Such disintegration patterns are often application-specific and require a careful assessment of which parts should stay in a single container and which parts can be separated.
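
The database/front-end split can be sketched as follows, assuming a hypothetical `UserDatabase` class standing in for the database container and a hypothetical `FrontEnd` class for the web front end. Because the front ends keep no user state of their own, any replica can serve any request, which is what allows the front-end container to be deployed many times. In a real deployment, the calls between them would travel over the network as in the f_a/f_b example; here they are plain method calls to keep the sketch short.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumption: PBKDF2 work factor for this sketch


class UserDatabase:
    """Stateful component: stores usernames with salted, hashed passwords."""

    def __init__(self) -> None:
        self._users: dict[str, tuple[bytes, bytes]] = {}

    def add_user(self, username: str, password: str) -> None:
        salt = os.urandom(16)
        digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
        self._users[username] = (salt, digest)

    def check(self, username: str, password: str) -> bool:
        if username not in self._users:
            return False
        salt, digest = self._users[username]
        candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
        return hmac.compare_digest(candidate, digest)  # constant-time comparison


class FrontEnd:
    """Stateless component: every replica talks to the same database."""

    def __init__(self, name: str, db: UserDatabase) -> None:
        self.name = name
        self.db = db

    def login(self, username: str, password: str) -> str:
        ok = self.db.check(username, password)
        return f"{self.name}: {'welcome' if ok else 'access denied'}, {username}"
```

For instance, three `FrontEnd` replicas sharing one `UserDatabase` give the same answer for the same credentials, so a load balancer can send each request to whichever replica is least busy.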

The cloud principles described above have significantly impacted the architectural design of the 5G core network. As in every generation of mobile networks, the core is charged with managing mobile devices, the User Equipment (UE), including attachment to a 4G/5G network, roaming when abroad, and billing for the calls and data users consume.

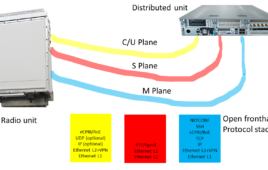

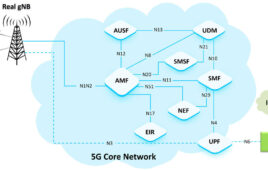

The core of a mobile telecommunication network can be compared with a service on the internet, except that instead of a website, the core offers a service to manage devices. Comparing the 4G and 5G Cores, the defining architecture has changed significantly, as illustrated in Figure 3. The 4G Core, represented by the green boxes on the left, has been disintegrated into many more functions, each serving a much smaller purpose, represented by the blue boxes on the right. Furthermore, the communication between these functions has been unified, with HTTP and JSON-encoded payloads defined as the common interface, following how interfaces between container applications are realized in the cloud.

The idea behind this effort is to increase flexibility in which functionality (i.e. which blue boxes) is required in a specific deployment and to allow operators to deploy a 5G Core composed of functions from multiple vendors. The architecture displayed on the right in Figure 3 is referred to as a service-based architecture, reflecting the “service-first” approach taken when defining and standardizing the 5G core network functions and their interfaces.
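
In a service-based architecture, a function that needs a service must first find an instance offering it, possibly built by another vendor. 3GPP assigns this role to a dedicated Network Repository Function (NRF). The sketch below is only a simplified in-memory stand-in for that idea, not the standardized NRF API: the classes `NFProfile` and `Registry`, the vendor names, and the addresses are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class NFProfile:
    """Simplified description of one network function instance."""
    instance_id: str
    nf_type: str   # e.g. "AMF", "SMF", "AUSF"
    vendor: str    # hypothetical: operators may mix vendors
    address: str   # where its HTTP/JSON service interface listens


@dataclass
class Registry:
    """In-memory stand-in for service registration and discovery."""
    profiles: dict[str, NFProfile] = field(default_factory=dict)

    def register(self, profile: NFProfile) -> None:
        self.profiles[profile.instance_id] = profile

    def deregister(self, instance_id: str) -> None:
        self.profiles.pop(instance_id, None)

    def discover(self, nf_type: str) -> list[NFProfile]:
        """Return all registered instances of the requested function type."""
        return [p for p in self.profiles.values() if p.nf_type == nf_type]
```

A consuming function would call `discover("SMF")` and send its HTTP/JSON requests to one of the returned addresses; which vendor built each instance does not matter to it as long as the interface is standardized.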

While 4G Core functions were often deployed as VMs, the 5G Core uses container technologies with many instances of the same function to distribute the load of managing UEs and to handle demand peaks, such as events where many people suddenly gather in the same area using their 5G-enabled handheld devices.
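
How many instances of a core function to run can be derived from the measured load. The sketch below borrows the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric), while ignoring its tolerance band and stabilization windows. Applying it to 5G Core functions, the metric of UEs handled per instance, and the function name `desired_instances` are assumptions for illustration, not something 3GPP specifies.

```python
import math


def desired_instances(current: int, load_per_instance: float,
                      target_per_instance: float,
                      min_instances: int = 1, max_instances: int = 100) -> int:
    """Scale the number of instances of one core function to the load.

    Uses the Kubernetes HPA rule ceil(current * current_metric / target_metric),
    clamped to [min_instances, max_instances].
    """
    if current < 1 or target_per_instance <= 0:
        raise ValueError("need at least one instance and a positive target")
    desired = math.ceil(current * load_per_instance / target_per_instance)
    return max(min_instances, min(max_instances, desired))
```

For instance, four instances each handling 15,000 UEs against a target of 10,000 UEs per instance would be scaled out to six when a crowd gathers at an event, and scaled back in once the load drops.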

5G has adopted several cloud principles in the design of its core functions for managing devices. This marks an evolution in the architectural foundations of the core network, similar to how the cloud has changed the way services are provisioned. 5G is just the starting point for the capabilities a service-based architecture can bring to users, beyond the frequently promoted higher throughput and lower latency. Future generations will push the customization of core networks to new limits, with a much larger penetration of mobile technologies across different types of devices and broader internet access for the public.

Sebastian Robitzsch is a Member of Technical Staff at InterDigital Europe Ltd and leads the Smart Systems and Services Proof-of-Concepting team at the Future Wireless Europe lab. His current focus is on beyond 5G architecture innovations in the area of flexible mobile telecommunication systems towards a truly end-to-end Service-Based Architecture. In the past he has been with Dublin City University, T-Systems, Fraunhofer FOKUS, and Nokia Research Centre. Sebastian received his Ph.D. from University College Dublin, Ireland, in 2013 and an M.Sc. equivalent (Dipl.-Ing. (FH)) from the University of Applied Sciences Merseburg, Germany in 2008.
