With militaries expanding their arsenals to include autonomous weapon systems and artificially intelligent armed robots, humanity must deal with some difficult questions about what these powerful technologies mean for the future of warfare, said former Army Ranger and Pentagon official Paul Scharre at Stanford on Tuesday.

“As we move forward with this technology, a challenge not just for the U.S. but for others is to figure out how to use it in more precise and humane ways and not lose our humanity in the process,” said Scharre at the annual Drell Lecture, an event organized by Stanford’s Center for International Security and Cooperation (CISAC) to address a current and critical national or international security issue.

“One of the ways we are seeing warfare evolve is people being pushed back from the edge of the battlefield – not just physically but also cognitively – as more and more decisions are being made by these systems operating at machine speed,” said Scharre, who is currently a senior fellow and director of the Technology and National Security Program at the Center for a New American Security, a nonpartisan defense and national security think tank based in Washington, D.C. “How comfortable do we feel about machines making life-and-death decisions for us?” he asked.

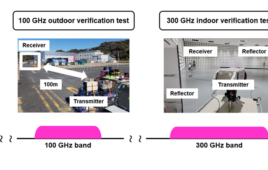

Former Army Ranger and Pentagon official Paul Scharre discusses the ethics of autonomous weapons and the future of war at the annual Drell Lecture on Tuesday. (Image credit: L.A. Cicero)

Rules of war

Scharre reflected on his experiences as a special operations reconnaissance team leader in the Army’s 3rd Ranger Battalion. He completed multiple tours in Iraq and Afghanistan.

He recounted one mission that sent him to the Afghanistan-Pakistan border to look for Taliban fighters. There, his team spotted a little girl – maybe 5 or 6 years old – circling their position with a herd of goats. They soon realized the animals were a ruse and the child was, in fact, reporting their location to Taliban officials by radio. Under the rules of war, that would have made her an enemy combatant, and Scharre’s team would have been allowed to shoot.

For Scharre and his team, however, shooting the child was not an option; it would have been the wrong thing to do, he said. But if an artificially intelligent robot had been in Scharre’s place, he believes the outcome would have been different: a robot programmed to comply with the rules of war would have fired at the little girl, he said.

Scharre said this raised a question: “How would you design a robot to know the difference between what is legal and what is right? And how would you even begin to write down those rules ahead of time? What if you didn’t have a human there, to interpret these, to bring that whole set of human values to those decisions?”

Following Scharre’s remarks, a conversation about the ethics of autonomous weapons and the future of war continued with Radha Plumb, head of product policy research at Facebook, and Jeremy Weinstein, a Stanford political scientist and a senior fellow at the Freeman Spogli Institute for International Studies (FSI).

Their discussion raised some of the legal challenges to establishing policies about deploying these emerging new weapons.

“There is an important asymmetry between humans and machines in the rules of war, which is that humans are legal agents and machines are not,” said Scharre, who worked from 2008 to 2013 in the Office of the Secretary of Defense, where he led the working group that drafted the directive establishing the Defense Department’s policies on autonomy in weapon systems. “An autonomous weapon is no more a legal agent than an M16 rifle is. And humans are bound to the rules of war and humans must comply with that,” he said.

But there is still no clear agreement about how involved humans must be in these decisions to ensure their actions are legally binding, Scharre said. He acknowledged that there is a “shred of consensus” that humans should be involved at some level when these technologies are deployed – some countries say there must be “meaningful human control” and the Defense Department asks for “appropriate human judgment” – yet there is no working definition for these terms in practice.

“But it does imply to some level, humans ought to be involved in these decisions,” Scharre said. “And that’s an important decision all of us need to unpack, not just military experts working in this space or technologists but also legal and ethical experts trying to understand what is that human involvement and what should it look like moving forward.”

An interdisciplinary conversation

Scharre said it is not just the military space that has to reckon with how humanity ought to use technology that could replace or augment human decision-making. He emphasized it is an interdisciplinary conversation: “We need not just technologists but also lawyers, ethicists and social scientists, sociologists to be part of that.”

Colin Kahl, who is a co-director at CISAC and the Steven C. Házy Senior Fellow, delivered concluding remarks. He noted that one of the top priorities for the university is to make sure students are equipped with the analytical and ethical tools needed to consider the full implications of current and future technologies. Included in that effort, he said, is a new partnership between CISAC and the Stanford Institute for Human-Centered Artificial Intelligence that will focus “on making sure that social, economic and ethical questions related to AI are front and center as these tools evolve.”

The Drell Lecture is named for the late Sidney Drell, a theoretical physicist and arms control expert who was a co-founder of CISAC and former deputy director of the SLAC National Accelerator Laboratory.