In an ordinary digital camera, a large amount of information is collected by the camera’s sensor. This information is immediately processed by compression software, and a much smaller amount is then stored. This process takes time, contributing largely to what is called “shutter lag.” Is it possible to collect only what is needed and thus reduce or even eliminate shutter lag? Thanks to some clever physics and compressed sensing, the answer is “yes.”

The mathematical concepts underlying compressed or compressive sensing date back to the 1970s, when the merits of the ℓ1ℓ1 norm were recognized in convex programming. The theory developed rapidly in the 1990s, and a breakthrough occurred in the early years of the 21st century with the work of Candès, Tao, Romberg, and Donoho. Collectively, they realized that one can design an efficient sensing or sampling protocol by capturing useful information in a sparse signal and compressing it into a small amount of data.

These beautiful mathematical ideas have found an application in the world’s fastest 2-D camera. By adding a digital micromirror device for spatial encoding and applying reconstruction algorithms from compressed sensing, Lihong Wang and colleagues at Washington University in St. Louis transformed a traditional one-dimensional streak camera into a 2-D ultrafast imaging camera. Now, for the first time, humans can see the movement of light on the fly (see Figure 1).

Figure 1. Still frames from movies captured with compressed ultrafast photography (CUP)shows a light pulse (a) bouncing off a mirror and (b) refracting at an air-resin interface.

Compressed Sensing

Compressed sensing is based on the observation that most signals or images contain only a small amount of crucial information. By strategically discarding unnecessary data, one can reduce the image’s size while retaining sufficient information to faithfully reconstruct the original. The full image is then reconstructed from the encoded data using linear programming algorithms.

To make this possible, compressed sensing relies on two principles: sparsity and incoherence. Sparsity implies that the image has a concise representation when expressed in the proper basis, while incoherence indicates that unlike the image of interest, the sampling/sensing waveforms have an extremely dense representation in the sparsifying basis. Thus, compressed sensing is a simple and efficient signal acquisition protocol that samples—in a signal-independent fashion—at a low rate and later uses computational power for reconstruction from what appears to be an incomplete set of measurements.

Instead of collecting all of the information about a signal or image in pixels in a large nn-dimensional vector xx, compressed sensing collects information in a much smaller kk-dimensional vector y=Axy=Ax. Here, AA is a (rectangular) matrix that represents the measurement process. If the signal is sparse, kk need not be very large to recover the original signal xx from yy; in practice, it usually needs to be no more than four times the sparsity level of xx.

The underdetermined system Ax=yAx=y can be solved in a remarkably efficient way by minimizing the ℓ1ℓ1 norm ∥x∥1=∑ni=1|xi|∥x∥1=∑i=1n|xi| of xx, subject to the constraint y=Axy=Ax. This convex problem can be converted to a linear programming problem, for which we have fast algorithms. When the signal is sufficiently sparse, the recovery via ℓ1ℓ1 minimization is provably exact.

Signals and images are rarely exactly sparse; more often they are only approximately sparse. Additionally, measured data are invariably corrupted by at minimum a small amount of noise; at the very least, small perturbations in the data should cause small perturbations in the reconstruction. So for practical reasons, compressed sensing must be able to deal with both nearly sparse signals and with noise. As was shown by Candès, Romberg, and Tao, if xx is not exactly sparse, then the quality of the recovered signal is as good as if one knew the location of the largest values of xx ahead of time and decided to measure those directly. In other words, the reconstruction is nearly as good as that provided by an oracle which, with full and perfect knowledge about xx, extracts the most significant pieces of information.

The 2-D Camera

The 2-D camera, which can record non-repetitive, time-evolving events at one hundred billion frames per second, was developed by Wang and his colleagues using a dynamic 2-D imaging technique called compressed ultrafast photography (CUP). CUP combines streak photography, an ultrafast imaging technique, with compressed sensing.

While traditional cameras capture discrete images by repeated opening and closing of a shutter, streak cameras deflect incoming light in a way that maps time onto a spatial dimension on the camera’s two-dimensional pixel array. The light is deflected by an amount proportional to its time of arrival, a process known as shearing.

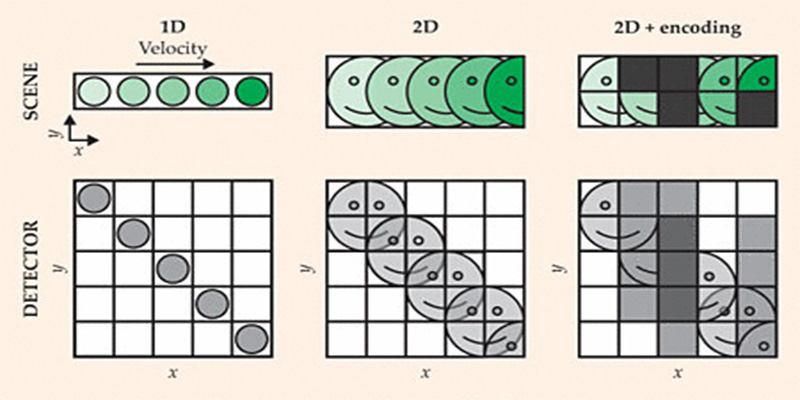

Traditional streak cameras can film in only one spatial dimension. When a one-dimensional scene like the one shown in Figure 2—where the object moves from left to right—is sheared vertically, the scene’s time domain is translated to the detector’s yy-domain. A movie can then be reconstructed by taking each row of pixels as a frame.

Figure 2. A one-dimensional scene is sheared vertically and the scene’s time domain maps to the detector’s y-domain. A movie can be reconstructed by taking each row of pixels as a frame.

By masking the 2-D scene with a known pixel pattern, Wang’s group recorded a 2-D scene in a single shot. The Washington University team was able to achieve this by taking their cues from signal processing. CUP facilitates detection of information about all three dimensions—spatial coordinates xx and yy, and time tt—on a single pixel array by encoding the input scene in the spatial domain. The resultant image is then sheared in the streak camera. A 2-D detector array with a single snapshot measures this encoded and sheared three-dimensional scene (x,y,t)(x,y,t). The encoded data can retrieve time information from the input scene in the subsequent image reconstruction.

Applications

The single-shot ultrafast camera is like a microscope for time, one that has many potential applications in fundamental and applied science. One remarkable medical application for the camera is in magnetic resonance imaging (MRI). MRI recovers images from a human body by taking a large number of measurements before reprocessing the data. The scan can take extended periods of time, much to the disadvantage of patients who have to be kept completely still. In the worst cases, the individual’s breathing is stopped, depriving the patient of oxygen for the entire scan. Compressed sensing techniques alleviate this issue by reconstructing the image using many fewer samples. These techniques significantly reduce the number of measurements required for the MRI from minutes to mere seconds.

CUP can potentially be coupled with microscopes to enable the observation of transient events in cell structures. For instance, it could be used to observe energy metabolism in cellular mitochondria. It could also enable understanding of light’s passage through tissues, which could yield important insights into therapies that use lasers to destroy abnormal or diseased tissue, with the objective of keeping healthy tissue unharmed.

As Wang puts it, the generic nature of the camera means it can be coupled with a wide variety of tools—from microscopes to telescopes—and thus be used to film anything from cellular functions to collapsing supernovae.