It’s always fun to peruse the statistics that analysts publish. Most of the trending ones are forecasts, such as Gartner’s “Twenty-one billion devices connected by the year 2020,” but one that really caught my attention was a statistic from Cisco: “Sixty-three percent of internet traffic in 2017 was generated by WiFi connected clients.” This was the first time wireless devices surpassed wired devices in this statistic. It’s clear that no single market, whether residential, business, or Internet of Things (IoT), is single-handedly driving this adoption; rather, it’s a combination.

Ultimately, this is leading more users to the perception that WiFi and the internet are synonymous, much to the dismay of broadband service providers around the world. Service providers are receiving an increasing number of support calls to troubleshoot WiFi connections for their subscribers, who claim, “The internet is broken, so it must be your fault, mister service provider.” As a result, WiFi improvements and reliability are high on service providers’ wish lists.

WiFi Testing Methods

Part of this problem’s solution lies in testing WiFi devices, especially the home router equipment that service providers offer to customers. Plenty of traditional testing has already been applied to WiFi devices, including total radiated power (TRP) and radiation pattern measurements, which device manufacturers have used to develop their antennas and case designs, trying to maximize the uniformity of the power transmitted to connected devices. Many developers also perform open air throughput testing, verifying bandwidth performance for a single user. However, as new WiFi technologies are adopted and deployed in growing numbers, this testing doesn’t provide the coverage required to adequately predict how well a device will perform in the field.

To guide the development of testing for these new systems, it’s helpful to break down the requirements into a few topics:

- Range Testing: where the system’s (access point and station) performance is measured as a function of the distance (range or attenuation) between the two devices.

- Interference Testing: where the system is operated in the presence of other interfering signals (i.e., noise).

- Multi-device Testing: where the system must be operated with coordination between additional devices.

- Interoperability Testing: where the system must work with devices from many manufacturers.

Range Testing

Range testing tends to be the first “check mark” on many service providers’ lists of device performance requirements. Simply put, WiFi devices should work “well” over a range of distances. In reality, this is far more complex. A key tenet for all testing should be repeatability. If the test (setup, design, or implementation) doesn’t allow a measurement to produce the same result over multiple runs, the test’s value is greatly reduced. For WiFi testing, this tenet requires dedicated supporting infrastructure. Specifically, equipment such as isolation chambers and controlled channels between the devices being tested greatly improves repeatability for range and throughput testing. Ideally, the range testing should approximate the real-world environment where the system would be operating.
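One simple way to put a number on repeatability is the coefficient of variation (standard deviation divided by mean) across repeated runs of the same test. The sketch below is only an illustration: the throughput readings and the 5% threshold are made-up assumptions, not values from any test plan.

```python
from statistics import mean, stdev


def coefficient_of_variation(samples):
    """Return the sample standard deviation divided by the mean."""
    if len(samples) < 2:
        raise ValueError("need at least two runs to judge repeatability")
    avg = mean(samples)
    if avg == 0:
        raise ValueError("mean of the samples is zero")
    return stdev(samples) / avg


def is_repeatable(samples, max_cv=0.05):
    """True when run-to-run spread stays within max_cv (assumed threshold)."""
    return coefficient_of_variation(samples) <= max_cv


# Hypothetical throughput readings (Mbps) from five runs of the same test.
chamber_runs = [412.0, 408.5, 415.2, 410.1, 411.7]
open_air_runs = [395.0, 310.4, 420.8, 288.9, 402.3]

if __name__ == "__main__":
    for name, runs in (("chamber", chamber_runs), ("open air", open_air_runs)):
        cv = coefficient_of_variation(runs)
        print(f"{name}: CV = {cv:.3f}, repeatable = {is_repeatable(runs)}")
```

With readings like these, the tightly grouped chamber runs pass the check while the scattered open air runs do not, which is the kind of difference a controlled setup is meant to produce.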

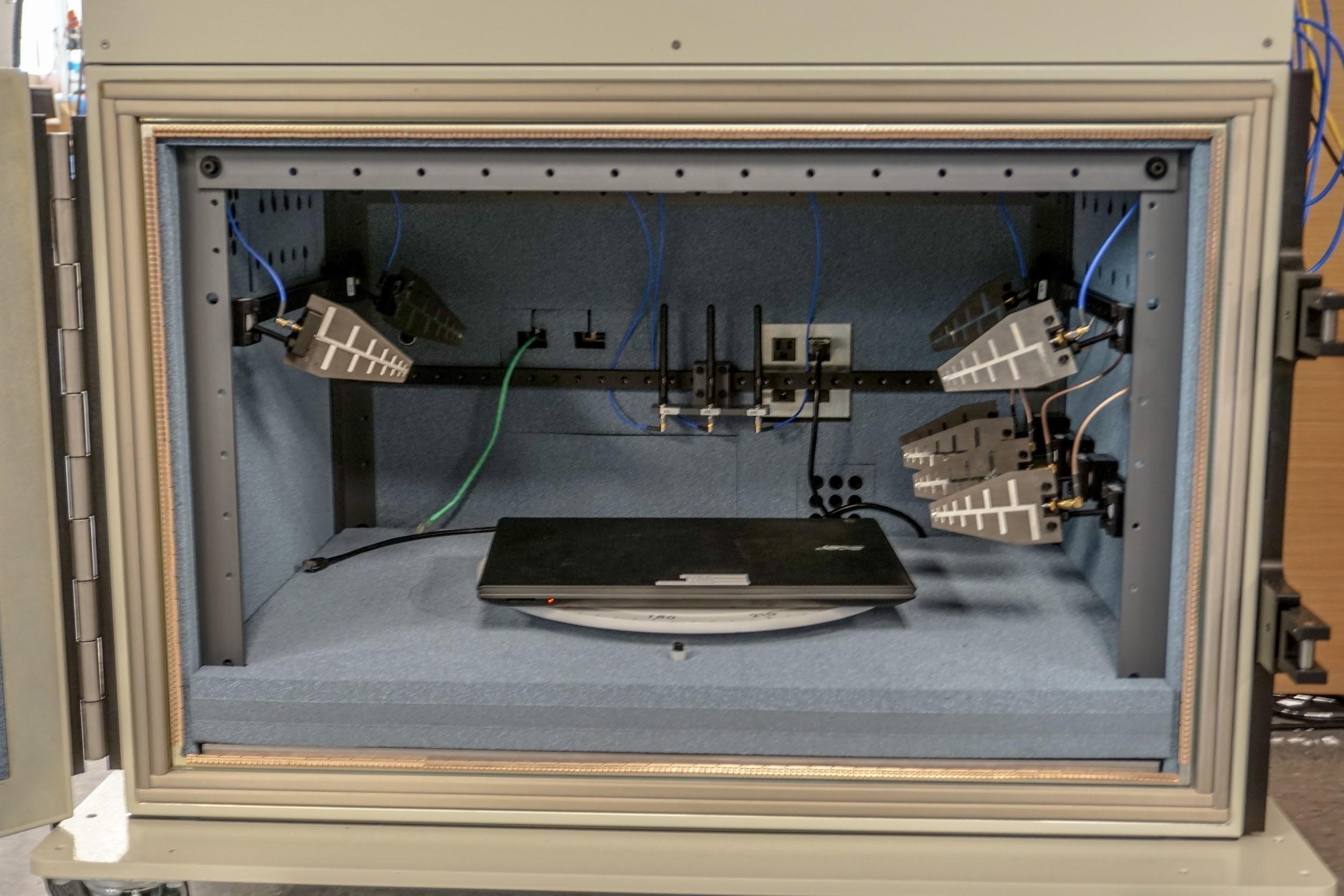

Two parts of this environment are the orientation between the access point and station and the multipath channel between the devices. One solution for implementing orientation within the range testing is to use near field antennas to “couple” the device into the channel between devices. This way, the device can be oriented relative to the antennas to measure any impact on system performance. Figure 1 shows an isolation test chamber equipped with a turntable and near field antennas for exactly this purpose. In this example, the controlled channel is a four-stream multipath fading channel, where the attenuation of each path can be individually controlled.

Figure 1: Isolation test chamber equipped with a turntable and near field antennas.
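A range test of this kind can be organized as a sweep over attenuation settings and turntable angles, with one measurement taken at each combination. The sketch below only builds the list of test points; the step sizes are hypothetical, and driving a real attenuator or turntable would depend on the lab equipment in use.

```python
from itertools import product


def build_sweep(atten_start_db, atten_stop_db, atten_step_db, angle_step_deg):
    """Return every (attenuation_db, angle_deg) pair to be measured."""
    attenuations = range(atten_start_db, atten_stop_db + 1, atten_step_db)
    angles = range(0, 360, angle_step_deg)
    return list(product(attenuations, angles))


# Hypothetical plan: 0 to 60 dB in 10 dB steps, turntable every 45 degrees.
plan = build_sweep(0, 60, 10, 45)

if __name__ == "__main__":
    print(f"{len(plan)} test points")
    for attenuation_db, angle_deg in plan[:3]:
        print(f"attenuation {attenuation_db} dB, angle {angle_deg} deg")
```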

WiFi systems adjust the bitrate by selecting the MCS (modulation and coding scheme) used to encode and modulate transmitted data. The selected MCS must “fit” the current channel, or the receiver’s error rate will increase, potentially to the point where the forward error correction can’t recover the frame. This will cause the transmitter to resend the frame after selecting a lower MCS index, which reduces the bitrate but also lowers the signal-to-noise ratio (SNR) the receiver needs to decode the frame, theoretically lowering the error rate. The complexity comes in the initial selection of the MCS used by the transmitters. The goal is to select the highest MCS the receiver can support for the given channel, thereby providing the fastest possible connection.
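The selection logic described above can be sketched as a table lookup. The data rates are the 802.11n figures for one spatial stream in a 20 MHz channel with the long guard interval; the minimum SNR values are illustrative assumptions only, since real thresholds depend on the chipset and channel. Actual rate-control algorithms are vendor-specific and considerably more involved than this.

```python
# (mcs_index, data_rate_mbps, assumed_min_snr_db)
# Rates: 802.11n, 1 spatial stream, 20 MHz, long guard interval.
# The SNR thresholds are hypothetical values chosen for illustration.
MCS_TABLE = [
    (0, 6.5, 5.0),
    (1, 13.0, 8.0),
    (2, 19.5, 11.0),
    (3, 26.0, 14.0),
    (4, 39.0, 18.0),
    (5, 52.0, 22.0),
    (6, 58.5, 24.0),
    (7, 65.0, 26.0),
]


def select_mcs(snr_db):
    """Return (index, rate) of the highest MCS the SNR supports, or None."""
    best = None
    for index, rate_mbps, min_snr_db in MCS_TABLE:
        if snr_db >= min_snr_db:
            best = (index, rate_mbps)
    return best


def fall_back(mcs_index):
    """Step down one MCS index after a failed frame, never below 0."""
    return max(mcs_index - 1, 0)


if __name__ == "__main__":
    for snr in (3.0, 12.5, 20.0, 30.0):
        print(f"SNR {snr} dB -> {select_mcs(snr)}")
```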

That connection also needs an appropriate level of reliability, or a large number of transmitted frames will require retransmission, adding latency and jitter to the communication stream carried by the wireless devices. Depending on the protocols used by the communication stream, the increased latency could decrease the throughput observed by the user, for example through a reduced TCP window size. Due to this interplay between the physical layer and higher layer protocols, it’s important to implement testing using actual protocols, as opposed to testing Layer 2 throughput only.
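A rough way to see why latency matters at the TCP layer is the well-known upper bound for a single flow: throughput cannot exceed the window size divided by the round-trip time. The sketch below applies that bound to hypothetical round-trip times; it ignores loss, slow start, and window scaling, so it is a ceiling rather than a prediction.

```python
def max_tcp_throughput_mbps(window_bytes, rtt_seconds):
    """Upper bound on single-flow TCP throughput: window / RTT, in Mbps."""
    if rtt_seconds <= 0:
        raise ValueError("round-trip time must be positive")
    return window_bytes * 8 / rtt_seconds / 1e6


if __name__ == "__main__":
    window = 65535  # bytes, the largest window without window scaling
    for rtt_ms in (10, 20, 40):  # hypothetical round-trip times
        bound = max_tcp_throughput_mbps(window, rtt_ms / 1000)
        print(f"RTT {rtt_ms} ms -> at most {bound:.1f} Mbps")
```

Under these assumptions, a round-trip time that grows fourfold because of retransmissions cuts the ceiling to a quarter, even if the radio link itself is capable of far more.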

Interference Testing

Interference testing adds another dimension, injecting other radio signals into the communications channel. Those signals might be transmitted by non-WiFi systems in the same spectrum, such as Zigbee, Bluetooth, microwave ovens, and some cordless phones (just to name a few). These non-WiFi signals are referred to as “alien noise,” since the WiFi systems have no way to receive or interpret them. Alien noise injected at high enough levels will degrade the SNR at the receiver to the point where no frames are demodulated without error. Fortunately, most of these alien signals use time-limited transmission or frequency hopping schemes, causing only intermittent receive failures on the WiFi systems. It’s also possible, and important, to test with injected noise signals representative of other WiFi systems, often referred to as self-interference.

These signals fall into two categories: either the channel of the noise signal exactly matches the WiFi channel of the systems being tested, or the two channels overlap without matching exactly. While the former case sounds like the most detrimental, the partial overlap case is worse. With complete overlap, WiFi systems can detect the noise signal and use their built-in channel back-off algorithms to avoid transmitting while it is present. With partial overlap, the interfering signal is harder to recognize as WiFi, so the back-off is less effective and the signal behaves more like noise. Both cases will occur in real-world deployments and should be tested in the controlled lab environment.
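For the 2.4 GHz band, the two cases can be told apart from the channel numbers alone. Channels 1 through 13 are centered at 2407 + 5 × channel MHz; the sketch below assumes a nominal 20 MHz channel width for both systems (older 802.11b signals are closer to 22 MHz wide, which would change the boundary cases).

```python
CHANNEL_WIDTH_MHZ = 20  # assumed nominal width for both systems


def center_frequency_mhz(channel):
    """Center frequency of a 2.4 GHz WiFi channel (1 through 13)."""
    if not 1 <= channel <= 13:
        raise ValueError("only channels 1-13 are handled in this sketch")
    return 2407 + 5 * channel


def classify_overlap(test_channel, noise_channel):
    """Classify an interferer relative to the channel under test."""
    spacing = abs(center_frequency_mhz(test_channel)
                  - center_frequency_mhz(noise_channel))
    if spacing == 0:
        return "co-channel"
    if spacing < CHANNEL_WIDTH_MHZ:
        return "partial overlap"
    return "no overlap"


if __name__ == "__main__":
    for noise in (6, 4, 1):
        print(f"test on 6, noise on {noise}: {classify_overlap(6, noise)}")
```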

Multi-device Testing

Lastly, the real-world environment is also going to include a large number of devices from various manufacturers, spanning a number of different WiFi technologies (or generations). It would be completely unreasonable to expect users to upgrade all WiFi devices in their network each time the IEEE releases the newest standard. Fortunately, device and chipset manufacturers have always ensured support for multiple generations of WiFi, making coexistence technologically possible. However, coexisting with older technologies does come with a cost in airtime: an older system transmitting at a lower bitrate takes longer (uses more airtime) to send the same frame of data than a newer system at a higher bitrate. For this reason, many systems attempt to implement proprietary mechanisms to ensure airtime fairness in these scenarios. The better these systems perform, the better the users’ perception of the wireless (or internet in most cases) performance will be. Testing with a mix of devices from various vendors will provide the best possible coverage for situations likely to be encountered in real deployments.
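The airtime cost of a slow device can be approximated with simple arithmetic: the time to send a payload is its size in bits divided by the PHY rate. The sketch below uses hypothetical rates and counts only the payload, ignoring preambles, headers, acknowledgements, and contention, so the absolute numbers are optimistic; the ratio between devices is the point.

```python
def payload_airtime_us(payload_bytes, phy_rate_mbps):
    """Microseconds needed to send the payload bits at the given PHY rate."""
    if phy_rate_mbps <= 0:
        raise ValueError("PHY rate must be positive")
    return payload_bytes * 8 / phy_rate_mbps


def airtime_shares(phy_rates_mbps, payload_bytes=1500):
    """Fraction of airtime each station uses when all send equal frames."""
    times = [payload_airtime_us(payload_bytes, r) for r in phy_rates_mbps]
    total = sum(times)
    return [t / total for t in times]


if __name__ == "__main__":
    rates = [6.0, 60.0]  # hypothetical legacy and newer station, in Mbps
    for rate, share in zip(rates, airtime_shares(rates)):
        airtime = payload_airtime_us(1500, rate)
        print(f"{rate} Mbps: {airtime:.0f} us per frame, {share:.0%} of airtime")
```

In this example the slower station takes roughly ten elevenths of the airtime while moving the same amount of data as the faster one, which is the imbalance airtime fairness mechanisms try to correct.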

Conclusion

One last key item missing from the WiFi space (until recently) has been an industry-wide plan for this type of performance testing. Without an agreed set of tests and metrics, device manufacturers were left to their own devices and claims, so service providers had to sort through all of that “data” and ultimately use it in their selection processes. This inevitably led to gaps and missed expectations on device performance. Currently, the Broadband Forum is in the final stages of developing the WT-398 WiFi In-Premises Performance Test Plan. This represents the first time the industry has reached agreement on performance tests and their metrics, and it will give service providers a powerful tool for evaluating WiFi devices from different manufacturers. Assuming service providers require devices to pass these tests before deployment, WiFi should keep up with internet demands for the next couple of years.