Connected factory applications in manufacturing equipment are creating complex new problems, both technical and economic. On the technical side, mixed-criticality applications must be separated to achieve timing, security, and efficiency goals while still communicating with the world outside the factory. On the economic side, manufacturers need to avoid the risks of changing long-standing core software algorithms. Altering these algorithms could be time-consuming and expensive, especially given the huge number of platforms that must be upgraded concurrently to realize a true connected factory.

The most efficient way for industrial equipment designers to solve these problems is to adapt existing software tools from other industries. One such tool, virtualization, can achieve the necessary separation while letting manufacturers leave existing core algorithms untouched. Virtualization has been used extensively in enterprise and network systems for decades but is relatively new to embedded systems.

Virtualization is a widely used term that encompasses a range of technologies and can mean very different things in different contexts. A virtualized cloud system performs very different tasks than a virtualized enterprise system, and both are further still from what a virtualized embedded system does. Regardless of context, virtualization provides similar benefits: it decreases the number of physical resources needed and provides a way to segment networks, applications, or processes.

Although methods and end applications for the various types of virtualization differ, they do have one thing in common: taking a finite amount of physical hardware and making it act like multiple separate virtual environments by creating software-defined partitions inside the hardware. Virtualization can work at a network level, a server level, or a single platform level. It allows you to run multiple sessions for multiple people or tasks on the same server simultaneously (as cloud computing applications do), or to separate a single desktop into two virtual machines.

Although virtualization was originally developed to solve enterprise issues, its separation of tasks and extension of resources are valuable in many different situations. However, virtualization schemes designed with different uses in mind will not all work equally well within the constraints of embedded industrial systems, such as limited memory and a power budget constrained by heat dissipation.

Embedded systems have different priorities than enterprise or data center server infrastructure systems. For many embedded systems, especially in industrial automation, meeting a deadline is the most essential part of the correct operation of the program; this determinism cannot be sacrificed to achieve other goals.

Software architectures such as real-time operating systems (RTOSs) and bare-metal periodic control loops have been common in industrial automation for years. Recent market trends are pushing industrial manufacturers to look at other software solutions so that they can add more non-real-time functionality around crucial real-time tasks. A commonly discussed example in the context of Industry 4.0 and the Industrial Internet of Things (IIoT) is cloud connectivity for uploading machine data to server-based predictive maintenance algorithms. System designers need a way to run these new IoT tasks without compromising or interfering with critical real-time tasks. Virtualization is a promising path forward, but with so many types of virtualization solutions available, choosing among them requires careful analysis.

The Need for Virtualization in Industrial Embedded Systems

Predictive maintenance isn’t the only IIoT application pushing manufacturers to find virtualization solutions. Wired non-real-time networking and cloud connectivity enable many other applications across a diverse set of industrial automation domains.

A related application is remote monitoring or remote updating. In many industries—manufacturing, process automation, and oil and gas—equipment is often installed in hard-to-reach or hazardous environments where sending a human to check or update equipment is dangerous and expensive. A connected machine could instead have updates pushed to it remotely from a server.

An even more forward-looking Industry 4.0 concept for connected machines is modeling an entire process in the cloud, such as an assembly line or a chemical reaction in pharmaceutical manufacturing, for in-depth optimization analysis, as shown in Figure 1. Such a model can also simulate changes to manufacturing flows before they are implemented. This type of modeling would require connectivity across nearly the entire factory. Larger equipment can have connectivity built in, while for smaller pieces of equipment it would be more cost-effective to have gateways aggregate their data.

Connectivity in industrial equipment will become this widespread only once it is cost-effective. Although this sounds somewhat far off, leading industrial companies appear to be moving in this direction with cloud platforms such as GE’s Predix and Siemens’ MindSphere.

Figure 1: Model of an automation process viewed on a tablet.

All of these examples require cost-effective connectivity that does not compromise the machine’s primary mission. Although web or server connectivity applications could benefit from lower latency, real-time operation is only “nice to have” for them and cannot be prioritized over the time-sensitive automation tasks that an RTOS manages in most industrial automation equipment. A drive on a conveyor belt can delay sending its maintenance data to a server without serious consequences, but it cannot miss executing a new command from a PLC to change the flow direction.

Many of the new applications driving changes in embedded industrial equipment center on networking. However, networks are not new in factory environments: field buses and industrial Ethernet protocols like Profinet and EtherCAT have been present in factories, in some form, for decades. What is new is the exposure of these networks to the outside internet. As cloud connectivity links equipment to the world outside the factory, the entire network inside the factory becomes more vulnerable to interference, whether malicious or unintentional. Separation is therefore necessary from a security perspective as well as a timing perspective.

Virtualization Uses Beyond Networking

Virtualization has uses beyond the coexistence of a critical real-time application and less-time-critical networking. Consider taking a standard PLC, machine controller, or protection relay and adding a larger, more sophisticated human machine interface (HMI) to make interaction easier for operators, as shown in Figure 2. It would be simpler to drive this larger display panel with standard graphics frameworks tuned for Linux than with an RTOS, especially since the graphical user interface (GUI) is generally customized not by the equipment manufacturer but by its end customers.

Figure 2: CNC machine with large HMI.

However, putting the whole system on Linux would not be a good choice for the critical functions of the equipment: the PLC logic, motion control, or protection algorithms. One solution is to use a simple, lightweight hypervisor to run both workloads on a single central processing unit (CPU), each on an OS optimized for it, while keeping them separated. However, picking the wrong virtualization method could add bloat, require more memory, and lengthen development time.

Types of Virtualization and Suitability for Embedded Systems

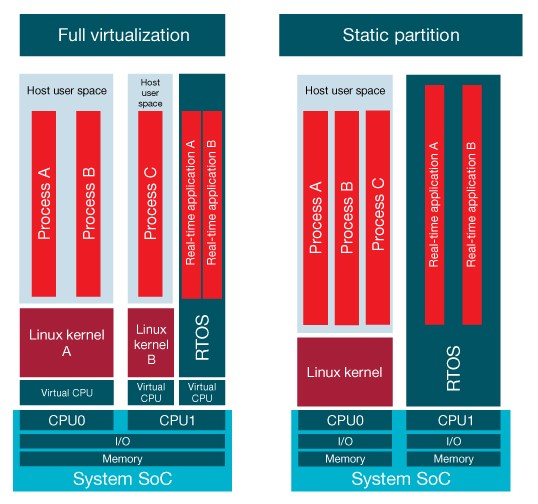

Virtualization can take two paths: full virtualization or static partitioning, also called core virtualization.

Full virtualization schemes emulate the hardware environment, so the virtual machines are not tied directly to specific hardware resources; several virtual machines can, for example, share one physical core.

Static partitioning schemes confine programs or tasks to fixed portions of the existing hardware in order to simulate separate systems. The software partitions are bound to the hardware partitions, so there is at most one OS per physical core, as shown in Figure 3. Because each partition owns its resources outright, this type of virtualization does not need to support overcommitment of resources such as CPUs or random access memory (RAM).
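To make the “one OS per core, no overcommitment” rule concrete, here is a minimal C sketch of a static partition table and a check that it obeys those rules. The `partition_t` type, the `partition_table_is_valid` function, and any addresses used with them are hypothetical illustrations, not the configuration format of any particular hypervisor; real static-partitioning hypervisors describe the same information in their own formats.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical descriptor for one static partition: a single guest OS
 * bound to one physical core and to one private, contiguous RAM region. */
typedef struct {
    const char *name;     /* e.g. "rtos" or "linux" */
    unsigned    core;     /* index of the physical core the partition owns */
    uint64_t    ram_base; /* first byte of the partition's RAM region */
    uint64_t    ram_size; /* size of the region in bytes */
} partition_t;

/* True if [a_base, a_base + a_size) and [b_base, b_base + b_size) overlap. */
static bool ranges_overlap(uint64_t a_base, uint64_t a_size,
                           uint64_t b_base, uint64_t b_size)
{
    return a_base < b_base + b_size && b_base < a_base + a_size;
}

/* Checks the static-partitioning rules: every partition sits on an existing
 * core and inside physical RAM [ram_start, ram_end), no two partitions share
 * a core, and no two RAM regions overlap, so nothing is overcommitted. */
bool partition_table_is_valid(const partition_t *p, size_t n,
                              unsigned num_cores,
                              uint64_t ram_start, uint64_t ram_end)
{
    /* Pass 1: each partition on its own. */
    for (size_t i = 0; i < n; i++) {
        if (p[i].core >= num_cores || p[i].ram_size == 0)
            return false;
        if (p[i].ram_base < ram_start || p[i].ram_base >= ram_end ||
            p[i].ram_size > ram_end - p[i].ram_base)
            return false; /* region falls outside physical RAM */
    }
    /* Pass 2: every pair of partitions. */
    for (size_t i = 0; i < n; i++) {
        for (size_t j = i + 1; j < n; j++) {
            if (p[i].core == p[j].core)
                return false; /* two OSs on one core */
            if (ranges_overlap(p[i].ram_base, p[i].ram_size,
                               p[j].ram_base, p[j].ram_size))
                return false; /* RAM shared between partitions */
        }
    }
    return true;
}
```

In this sketch, a table with an RTOS on core 0 and Linux on core 1, each in its own RAM region, passes; moving Linux onto core 0, or letting its region overlap the RTOS region, fails. Because the check runs once on a fixed table, nothing has to be arbitrated at run time.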

Figure 3: Comparison of the two main types of virtualization.

Both schemes enable the separation of isolated tasks (such as sharing a system among multiple users) or the separation of critical tasks from less-critical tasks (such as separating a secure domain from a general-purpose domain). Full virtualization additionally lets you suspend entire OSs to persistent storage or even migrate them live from one physical processor to another over a network connection. Static partitioning gives up this flexibility in exchange for stronger guarantees of determinism.

Because different virtualization schemes relate to hardware in different ways, each has natural advantages for certain applications. Full virtualization is powerful; it can make a single server act like hundreds of servers. It does have drawbacks, however, because multiple software instances may try to use the same finite set of hardware resources simultaneously. The hypervisor software can arbitrate these conflicts, but doing so adds latency to applications.
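The latency cost of sharing can be illustrated with a deliberately simplified model. The sketch below assumes a hypothetical round-robin hypervisor scheduler in which several virtual CPUs (vCPUs) share one physical core with a fixed time slice and a fixed context-switch cost; real hypervisor schedulers are more sophisticated, so treat the numbers as an illustration of the trend, not a measurement. The function name `worst_case_wait_us` is hypothetical.

```c
#include <stdint.h>

/* Simplified model (an assumption, not any real hypervisor's scheduler):
 * n_vcpus vCPUs share one physical core round-robin with a fixed slice.
 * A vCPU whose slice has just expired waits for every other vCPU's slice,
 * plus one hypervisor context switch before each of those slices and one
 * more to switch back to it: (n - 1) * slice + n * switch_cost. */
uint64_t worst_case_wait_us(unsigned n_vcpus, uint64_t slice_us,
                            uint64_t switch_us)
{
    if (n_vcpus <= 1)
        return 0; /* static-partitioning case: the core is never shared */
    return (uint64_t)(n_vcpus - 1) * slice_us
         + (uint64_t)n_vcpus * switch_us;
}
```

Under these assumptions, four vCPUs with 1 ms slices and 5 µs switches give a worst-case wait of 3020 µs, which would already miss a 1 ms control cycle. With one vCPU per core, as in static partitioning, the model adds no wait at all.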

Implementing Virtualization

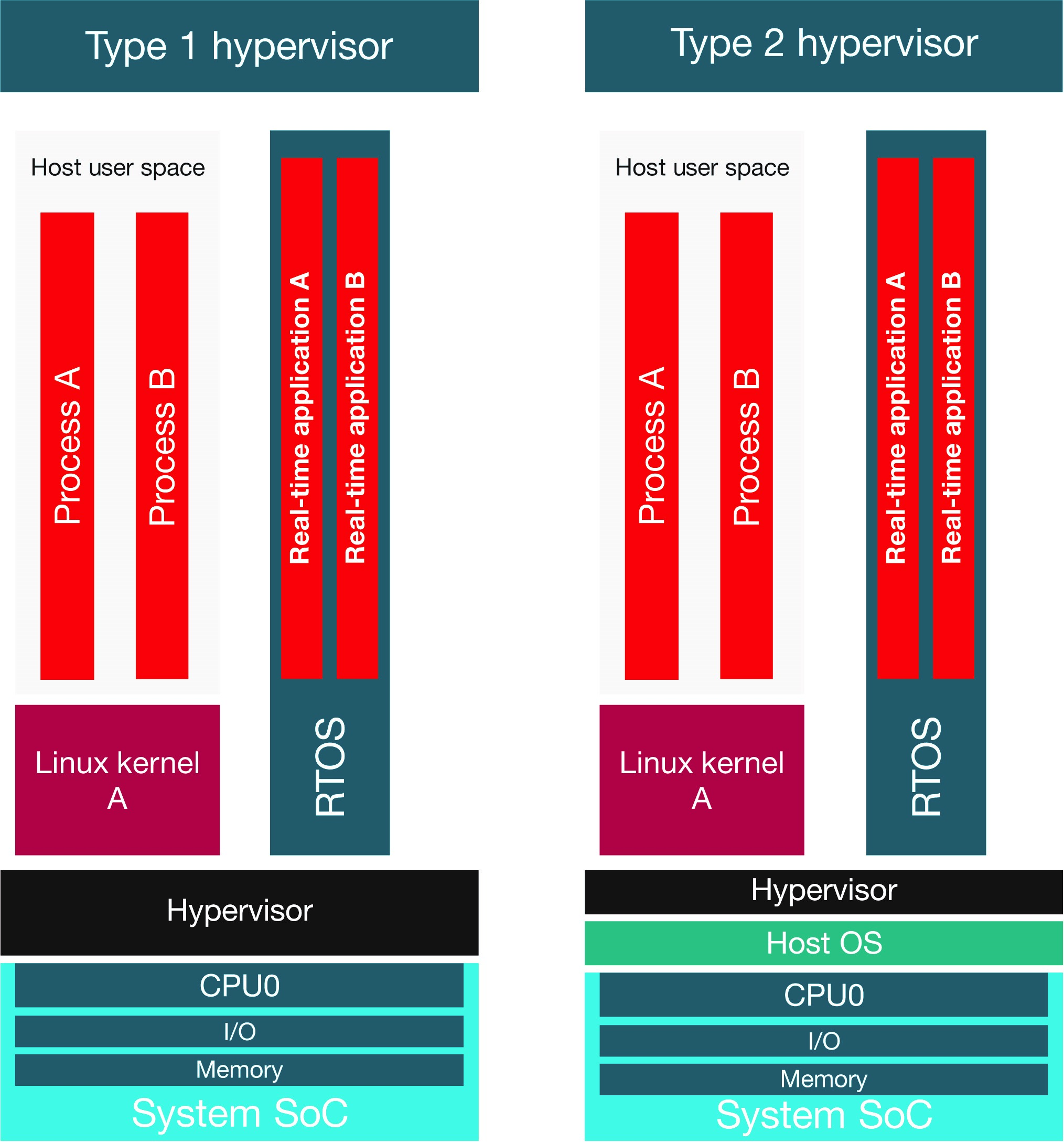

Both full virtualization and static partitioning require underlying software to create the virtualization scheme. This software is called a hypervisor. It runs at a privileged execution level and manages guest machines or guest OSs. The hypervisor also manages how the virtualized systems share the actual physical resources.

Just as there are two types of virtualization, there are two types of hypervisors: type 1 and type 2. A type 1 hypervisor, often called a bare-metal hypervisor, is a dedicated layer of software running directly on the hardware. It hosts OSs and manages resource and memory allocation for the virtual machines.
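One way to picture how a type 1 hypervisor keeps each guest’s memory separate is an address translation step between what the guest thinks is physical memory and where that memory really lives. The sketch below models this with a single hypothetical base/limit window per virtual machine; actual hypervisors typically rely on hardware second-stage page tables (such as Arm stage 2 translation or Intel EPT), so this captures only the isolation property, not a real implementation. The names `vm_window_t` and `guest_to_host` are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical per-VM memory window assigned by the hypervisor.
 * The guest sees its RAM as starting at address 0. */
typedef struct {
    uint64_t host_base; /* where the VM's RAM really lives in host memory */
    uint64_t size;      /* bytes of RAM granted to the VM */
} vm_window_t;

/* Translates a guest-physical address to a host-physical address.
 * Returns false, i.e. an access fault the hypervisor would handle, if the
 * guest touches memory outside the window it was granted. */
bool guest_to_host(const vm_window_t *vm, uint64_t guest_pa,
                   uint64_t *host_pa)
{
    if (guest_pa >= vm->size)
        return false;
    *host_pa = vm->host_base + guest_pa;
    return true;
}
```

Because both guests in this model can use the same guest address and still land in different host memory, neither needs to know the other exists, and an out-of-range access from one guest can never reach the other.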

A type 2 hypervisor is also known as guest OS virtualization. Here, there is an additional lower layer of software: a host OS that provides drivers and services for the hypervisor hosting virtualized guest OSs, as shown in Figure 4. The guest OSs are unaware that they are not running directly on the system hardware.

Figure 4: Comparison of the two types of hypervisors, Type 1 and Type 2.

A type 2 hypervisor acts as an abstraction layer. Guest OSs become processes of the host OS and depend on the host OS to access hardware resources. The advantage of type 2 hypervisors is that you don’t need to make changes to the host or guest OSs, but the drawback is that the layers of abstraction can decrease overall system performance.

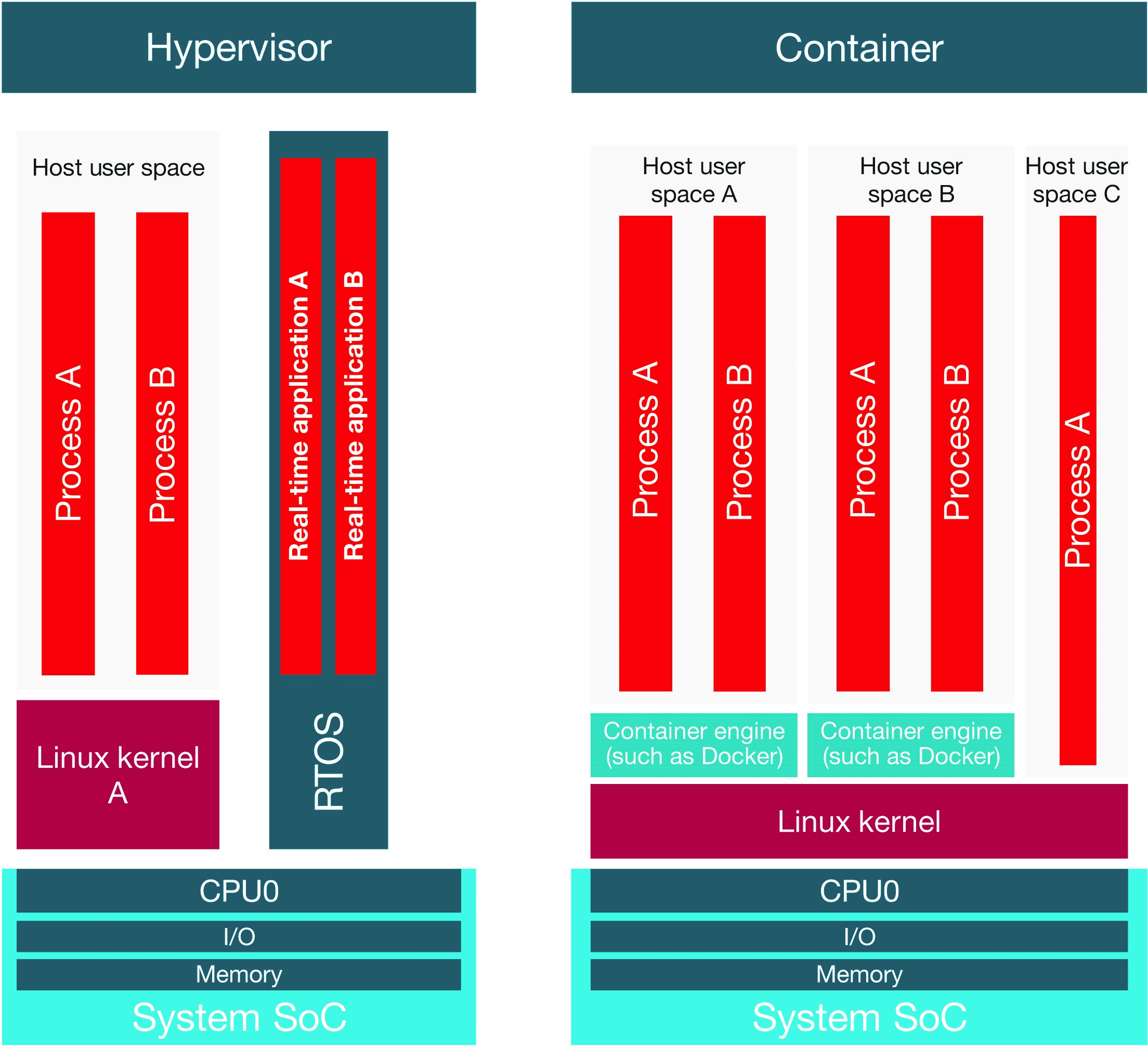

Besides hypervisors, another popular software solution for application separation is containers. Whereas hypervisors virtualize hardware to run multiple OSs, containers virtualize an OS to run multiple applications, as shown in Figure 5. Containers are less resource-heavy and allow more applications per system, but their disadvantage is that all applications must share the same OS kernel.

Figure 5: Comparison of a container and a hypervisor running on a system on chip (SoC).

Although this separation of applications is useful in embedded industrial equipment, containers on their own do not let real-time and non-real-time tasks run on separate, purpose-built OSs, because every container shares one OS; the system cannot run an RTOS and a high-level OS side by side. Containers do not address static partitioning or determinism, but you could use them in embedded systems to manage other high-level software stacks without real-time constraints on the Linux side of a partitioned system.

Virtualization Solutions

The need for virtualization will increase as applications that include cloud connectivity or demand easier interaction between human operators and machines push manufacturers to add more non-real-time functions to real-time industrial equipment. Industrial manufacturers seeking a software strategy for virtualization will need to consider these questions when weighing their options: Can they implement the solution quickly, without investing heavily in time, R&D, or software licensing fees? Can they implement it on cost-effective hardware without requiring extensive memory? And, most critically, can the solution prioritize real-time operation, which is the true goal of the equipment?