EE World interviewed John D’Ambrosia, who told a few stories from earlier times and gave a peek at what’s coming next from the IEEE 802.3 set of standards.

Ethernet is everywhere. Created in 1973, the IEEE 802.3 communications bus has taken on many forms, most of which consumers and IT people don’t see. The beauty of Ethernet is that, because of stringent technical standards, you plug it in and it just works. To make interoperability possible, engineers hammer out the specifications. Data rates started at 10 Mb/sec over copper, but today we have speeds of 800 Gb/sec over fiber, with many speeds and flavors in between.

Ethernet’s reach ranges from millimeters on chip-to-chip electrical links to tens of kilometers over optical links. Standards work doesn’t focus only on the highest attainable speed; intermediate speeds are always in the works. For example, today’s work includes speeds of 800 Gb/sec and 200 Gb/sec. Why? Because not everyone needs the highest speeds or the cost of equipment capable of handling them.

Where is Ethernet heading today? To find out, EE World spoke with IEEE 802.3 Task Force Chair John D’Ambrosia, who has worked on Ethernet design and standards since the late 1980s.

EEWorld: Upon hearing that 2023 was the 50th anniversary of Ethernet, I immediately thought of you, John. I didn’t want to ask you about the beginnings of Ethernet. Instead, please take us through how you’ve seen things progress in the time that you’ve been involved.

D’Ambrosia: So that’s kind of a loaded question because I joined the workforce as a summer intern in May of 1988. I was working at Amp, the connector company, which had a fiber-optic group. I got pulled into testing fiber-optic data links. I later found out the data that I was generating was being brought into the IEEE, and I was just thinking, I wish I could go find those reports, because I’m sure I wasn’t given any credit. I was just one of the test monkeys in the back. But it’s amazing to me how it goes back to there, right? I mean, right from the very beginning.

While at Amp, I was involved with semiconductor companies, and we started doing work with them. That eventually led to getting involved with the 10 Gigabit Attachment Unit Interface (XAUI). I remember the first time I went to a meeting, I was terrified, because it felt like Top Gun — the best of the best come here, you’re going to get challenged. I had all these preconceived notions, some of them weren’t far off. You get challenged.

They were developing backplanes. XAUI is technically not a backplane solution, but that’s where a lot of the focus was. I saw a group that asked the hard questions, had the data come in, analyzed, discussed, and debated that data, made decisions, and moved on. That’s a lot of what I continue to see today.

So find a problem, figure out the problem. People want to get involved because they want the Ethernet brand on it. The problem gets solved. Repeat. I have seen this happen now, multiple times. I think it’s a testimony to Ethernet in general, that you have people who want to come here and do that sort of thing. For whatever it is, right? When I came in, there wasn’t a backplane spec, XAUI wasn’t a backplane spec. I went away for a year and a half and got a call from somebody when we started the blade server project that later became IEEE 802.3ap. At that point, it was time to come home. That was really a great way to phrase it, because I really had embraced the whole Ethernet mentality.

I’ve seen that repeating mindset, and I think that’s a key point. I hope you’re not just wanting technical stuff, because I think there’s so much more to IEEE than just the technical aspect. There’s a whole marketing thing to it. There are the people who work there, those who over time help pass it on. We’ve been mentored by the people who came before us, and we’re mentoring people who will come after us.

I’ve seen electrical backplane interfaces come into the Ethernet sphere. After that, blade servers and backplanes came in. That’s when I started my journey down higher speeds. I mean, I just said this to somebody today: “I’ve taken Ethernet in my task force from 10 Gbit/s to 1.6 Tbit/s.”

The whole speed thing is definitely one of the hallmarks of my journey with Ethernet. But it’s been so much more than that. We’ve identified trends and things that needed to be fixed. We’ve identified new areas. For example, I’m bringing coherent optics into Ethernet. I’ve helped the plastic optical fiber people get in during one of their calls for interest (CFIs).

We’ve gone from, you know, first it was a Gig, and then we jumped to 10 Gig. Then we had the whole rate debate, if you remember that in the last decade where, oh, let’s do 10. I forget the whole hierarchy. We were at 10, then we introduced 40 and 100. Then people wanted 25, then 50, then 2 and a half and 5. It was madness. We called it ‘the great rate debate.’

That was a big deal. Right? For two years, it drove so much industry conversation. It seemed like that’s all we talked about, the various rates.

One of the things I was very happy with, I’m sure you remember the whole Ethernet mantra of “10 times the power or 10 times the speed at three times the cost.”

EEWorld: Yes.

D’Ambrosia: That’s a great marketing saying, something that’s catchy and memorable. I feel like I helped contribute to people recognizing that it’s Ethernet, and Ethernet wins when it solves the problem that’s being presented to it, not by trying to force the problem to adopt the Ethernet solutions available to it.

This is where you see 2.5 Gig and 5 Gig come in. Ethernet’s evolution has been continuous. That 10x leap that I started off with back in 2002 is now very, very different. I don’t even know how to characterize it anymore, other than follow the series or follow the lane rates. That whole progression has changed.

Another change as well: when I started the 802.3ba project that came with 40 Gig and 100 Gig, we were doing two speeds. Because of Ethernet’s multi-lane structure, we will introduce solutions for four speeds at the same time. What used to take multiple years and projects to bring out a whole ecosystem now happens all at once. That’s just mind-boggling to me to watch, because I’ve seen historically how it happened. Oh, we develop 50 Gig signaling for 400 Gig. Oh, let’s go off and do 50 Gig Ethernet now. Now it’s like, oh, we’re developing 200 Gig signaling. Okay, let’s do 200G, 400G, 800G, and 1.6 Tbps.

It allows the entire ecosystem to move together now. Before, we’d build out this and that. When I first came in, I felt like I was chasing my tail, because the chips are faster. Oh, now the IOs are faster. And now we need a bigger, faster backplane. It just seemed like you went from one problem to the next, as opposed to this whole approach where we’ll give you all this at one time. We’re doing it much faster.

EEWorld: On the multi-lane approach that you mentioned, I understand the concept: the number of lanes could be 4, could be 8, could be 10. Maybe there’s more.

D’Ambrosia: Yes, we’re binary. We’re binary in Ethernet. That is one thing that I was just laughing at as you were saying that because it’s 1, 2, 4 and 8, that’s where we’re at now.

The 10 was not very good for us. We did 10 (lanes) by 10G for 100. That didn’t work out too well. The whole muxing didn’t work out very well either. There were a lot of issues with that. We’re on a binary path now. I think the next question, and it’s just a matter of time, is when 16 lanes become desirable, something that the market actually wants and will use. I think it’s going to be sooner rather than later.
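The lane arithmetic behind that answer can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the rates are the nominal figures quoted in the interview, not exact line rates with coding overhead.

```python
# Illustrative only: aggregate Ethernet rate = number of lanes x per-lane rate.
# Rates are the nominal figures from the interview, not exact signaling rates.

LANE_COUNTS = (1, 2, 4, 8)  # the "binary" lane counts D'Ambrosia lists


def aggregate_rates_gbps(lane_rate_gbps, lane_counts=LANE_COUNTS):
    """Return {lanes: aggregate rate in Gb/s} for one per-lane rate."""
    return {lanes: lanes * lane_rate_gbps for lanes in lane_counts}


def format_rate(gbps):
    """Render a rate in the interview's shorthand, e.g. 800G or 1.6T."""
    return f"{gbps / 1000:g}T" if gbps >= 1000 else f"{gbps:g}G"


if __name__ == "__main__":
    # One 200G signaling effort yields four Ethernet speeds at once.
    for lanes, rate in aggregate_rates_gbps(200).items():
        print(f"{lanes} x 200G -> {format_rate(rate)}")
    # The older, non-binary case mentioned above: 10 lanes of 10G.
    print(f"10 x 10G -> {format_rate(10 * 10)}")
```

With a single 200G lane rate, the four binary lane counts give 200G, 400G, 800G, and 1.6T, which is the family of speeds mentioned earlier in the conversation.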

EEWorld: It seems to me that’s the limiting factor, because you can go to some number of lanes. The other way to get overall aggregate speed is by increasing the speed of the individual lanes. I’ve been following the people at DesignCon, for example. That’s what they do. They’re doing it all at the electrical level. Everything that they do is driven by what you can do in optics. If you go to OFC, whatever you hear at OFC becomes next year’s problem at DesignCon.

D’Ambrosia: It’s a little bit more than that. First of all, you have to worry about your bit rate and your baud rate. Baud rate: how fast am I going? Bit rate: how many bits am I transferring? How many bits per baud am I transferring? So you’ve got the signaling, but then you also have to worry about the modulation. So you’ve got those things. Then if you look at it electrically, as far as I know, with the exception of BASE-T, where you’re driving the same pair in both directions, I’m not aware of any sort of lambda approach, if you will, to electrical signaling. I think there’s some discrete multitone (DMT) modulation and stuff that they do, but when you look at it electrically, for the most part it’s a single pair carrying a single electrical signal, if you will. Whereas with optics, you have parallel fiber, you have duplex fiber, and then you also have multiple lambdas on a fiber.

One of the things that has become a real naming headache for us is, what are we going to do when we get to the point where we have multiple lambdas on parallel fiber solutions? Because we haven’t really gone too much there yet. I don’t believe so; maybe with the 400 Gigs, but I’d have to go look at that in a little more detail. But that’s coming, because people like this fiber infrastructure. It’s going out in a lot of places.

So when you look at the parallelization and the aggregate rate, you have to look at what medium you’re talking about and then, as you were saying, how it all goes together.
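The bit rate versus baud rate distinction that D’Ambrosia draws comes down to one relationship: bit rate equals symbol (baud) rate times bits per symbol, where bits per symbol is log2 of the number of distinct signal states. The Python sketch below assumes raw line rates with no coding or FEC overhead; the function names and the 50 GBd figure are illustrative, not taken from any standard.

```python
import math

# Illustrative only: raw line rates, ignoring coding and FEC overhead.


def bits_per_symbol(states):
    """Bits carried per symbol when each symbol has `states` distinct values
    (2 for NRZ, 4 for PAM-4, 16 for 16QAM)."""
    return math.log2(states)


def bit_rate_gbps(baud_gbd, states):
    """Bit rate in Gb/s for a symbol rate in GBd and a modulation order."""
    return baud_gbd * bits_per_symbol(states)


def baud_for_bit_rate(bit_rate, states):
    """Symbol rate in GBd needed to carry `bit_rate` Gb/s."""
    return bit_rate / bits_per_symbol(states)


if __name__ == "__main__":
    baud = 50  # hypothetical symbol rate in GBd
    for name, states in (("NRZ", 2), ("PAM-4", 4), ("16QAM", 16)):
        print(f"{name}: {bits_per_symbol(states):g} bits/symbol, "
              f"{bit_rate_gbps(baud, states):g} Gb/s at {baud} GBd")
```

Aggregate throughput then multiplies this per-lane bit rate by the number of lanes, fibers, or lambdas, depending on the medium.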

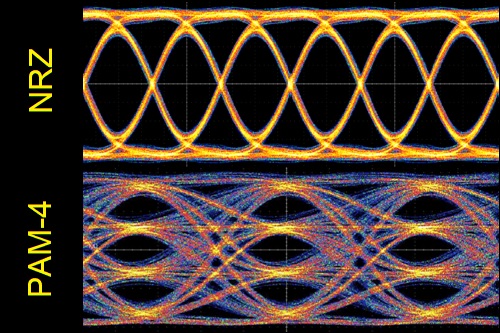

EEWorld: Now on the electrical side, the big change in modulation was going from NRZ to PAM-4. I remember maybe 10 years ago, when everybody said, ‘PAM-4 is coming.’ And you had a school of people who said ‘We’re going to get the next speed.’ Whether it’s the doubling of the rate (it’s usually a doubling) when they went from 10 to 25 to 50 to 100G. Now, it’s 200G. They refer to it as 224 because of the overhead. The NRZ people were saying we’re going to find a way to tweak this and get another 2x increase in speed because PAM-4 has its own problems. You don’t hear that anymore.

D’Ambrosia: Do you want to know when the whole PAM-4 stuff was actually going around?

EEWorld: Sure.

D’Ambrosia: In 2005, I think, there was a little group called the High Speed Backplane Initiative. I was secretary for that group. That group was trying to make 10 Gig with PAM-4.

EEWorld: Interesting.

D’Ambrosia: So do you remember the company Accelerant Networks? I was doing 10 Gig on some of my old backplanes with PAM-4 back in 2000. Actually, it was in my old house, so this was pre-2005.

EEWorld: Well, we started hearing about PAM-4 in the DesignCon world in the mid-2010s or so, but in terms of speed, the jump was from 25 Gig to 50 Gig. Some people managed to get that speed with NRZ. Beyond that, the NRZ people finally realized that it had run out of gas: there wasn’t enough bandwidth in the copper connections and so on, so we had to double the data rate without doubling the bandwidth. When they went to PAM-4, people talked about going beyond that. There were some demonstrations of PAM-8, but that hasn’t happened yet.

D’Ambrosia: We’ll get to that in a second. I have to take back what I said. Forgive me, because this is actually from 2003, so it’s 20 years ago. This is dated January 28, 2003. At the time we were actually trying to develop PAM-4 for 4.96 Gig to 6.375 Gig. That ultimately morphed into the Optical Internetworking Forum (OIF) Common Electrical I/O (CEI) project, because at that point there was a real push on making legacy backplanes go faster, and that was where it happened.

Around 2004, I was testing 10 Gig on my backplanes. I was running 10 Gig across their great chip, with all of the onboard diagnostics that you could run. We were clearly doing it back then. At that point, there was another one, I think, called UXPi (Unified 10-Gbit/sec Physical-layer Initiative). They were pushing to do 10 Gig serial with NRZ. When we got to 25 Gig, the backplane project (802.3bj), we actually wound up developing 25 Gig with both NRZ and PAM-4.

With four amplitude levels, PAM-4 modulation sends two bits per symbol as opposed to NRZ’s one bit per symbol without an increase in bandwidth.
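To make the two-bits-per-symbol point concrete, here is a minimal Python sketch of NRZ and PAM-4 symbol mapping. The Gray-coded level assignment and the normalized levels (-3, -1, +1, +3) are assumptions chosen for illustration, and the function names are hypothetical; real transceivers add precoding, FEC, and equalization that are not shown here.

```python
# Illustrative only: maps a bit stream to NRZ and PAM-4 symbols.
# The Gray-coded mapping and normalized levels are assumptions for this sketch.

GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}


def nrz_encode(bits):
    """One symbol per bit: 0 -> -1, 1 -> +1."""
    return [1 if b else -1 for b in bits]


def pam4_encode(bits):
    """One symbol per pair of bits, using four amplitude levels."""
    if len(bits) % 2:
        raise ValueError("PAM-4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_decode(symbols):
    """Inverse of pam4_encode for noise-free symbols."""
    inverse = {level: pair for pair, level in GRAY_PAM4.items()}
    return [bit for s in symbols for bit in inverse[s]]


if __name__ == "__main__":
    bits = [0, 0, 0, 1, 1, 1, 1, 0]
    print("NRZ symbols:  ", nrz_encode(bits))   # 8 symbols for 8 bits
    print("PAM-4 symbols:", pam4_encode(bits))  # 4 symbols for 8 bits
```

The same eight bits need eight NRZ symbols but only four PAM-4 symbols, so the symbol rate, and with it the required bandwidth, is halved for a given bit rate. The trade-off is that four levels must fit within the same signal swing, so adjacent levels sit closer together.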

Although NRZ won, developing the PAM-4 spec was a very good learning tool for IEEE. We started the 802.3bj project in 2010-2011. I think it was November of 2010 for the CFI. So that would have been in 2011. The race was on.

During the 802.3bs project, we were developing 400 Gig. So that would have been about 2015. There was a real push at that point to make the shift to PAM-4. It was a huge, huge industry decision. I remember the OIF at that point was debating it. We knew they were in the lead. There was a straw poll taken and I think there was significantly more support for PAM-4 than people thought at that time.

I don’t know if you’ve ever attended my meetings, but there’s a straw poll or motion that goes up and people say, ‘I didn’t realize that.’ They just would not know that everybody was thinking along the same line. That was around 2015 and we took off from there.

EEWorld: That sounds about right. That’s when we really started hearing about PAM-4 and all the electrical problems that came with it: the smaller eye openings, and so on.

D’Ambrosia: That’s the problem with PAM-8, right? I remember when I first started exploring PAM and understanding it. At first I thought I could do PAM-30. I didn’t fully understand what I was getting into at that point.

So, I don’t think PAM-8 is going anywhere. In my own current project, there was some debate early on about PAM-4 versus PAM-6. We didn’t move to PAM-6 when we made the jump to 200 Gig; we’re all in a PAM-4 world at this point for 200 Gig as well.

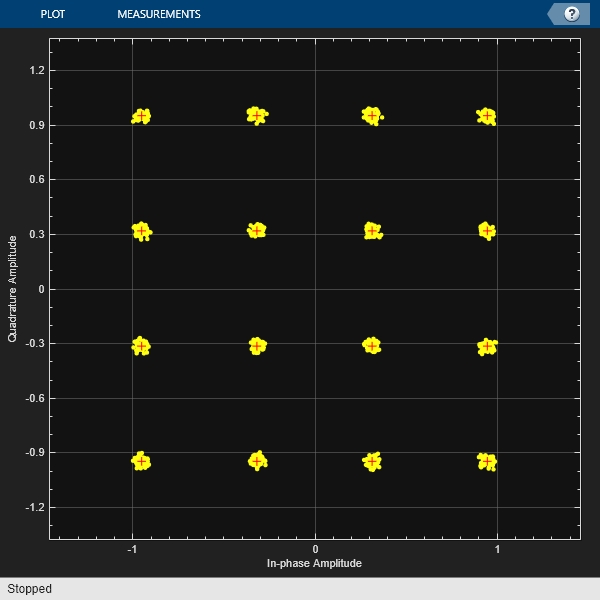

With the exception of the coherent signaling with QAM. We’ll be doing 16QAM for the longer-reach optics. New things come along, new technologies come along, we figure it out and decide, and we move on. Step and repeat.

Quadrature amplitude modulation uses a combination of amplitude and phase to send signals. This 16QAM signal has four amplitudes and four phases.

EEWorld: You mentioned 1.6 terabit. That I’m assuming would be 8 by 200?

D’Ambrosia: For all of the electrical interfaces and direct-detect optics, it is 8 by 200. I’m choosing those words carefully. We don’t have a 1.6 coherent objective yet, but there has been talk about that. I know other groups are looking at that as well.

EEWorld: Ethernet cables have had to evolve to get to the next speed. As you said the modulations have to evolve, and at least on the wired side, and the copper side, the cables have to evolve to keep up. Do you get involved in that at all?

D’Ambrosia: So when you say “involved,” that’s a loaded question. I’m not designing cables anymore, but it does show up in my groups. First of all, you hear about improvements. I remember at one point, they were talking about how the wraps on the cables can actually contribute to the suck-outs in the S21s. It wasn’t just that. The easiest one is that you have to connect a cable to something, right? So you worry about the stubs on the solder joints that get connected, because that has an impact, and you worry about the crosstalk.

Now there’s more evolution in terms of ‘Oh, what about the common-mode aspects of this? What about the skew?’ So we are always seeing the questions come up. As for the improvements, people bring their product in and show, ‘Yeah, we can do this, this is feasible.’

We also have explored things with the FR4. I don’t remember, Martin, when you and I first met, but I used to spend a lot of my time on backplanes, and I got involved in so many different aspects of this. I can tell you, I remember sitting at home with my wife on a Friday night, plotting S21s while watching movies with her. I lived and breathed that stuff.

We got into the whole environmental aspects with FR4, where moisture and heat could really screw up its capabilities. That later got fixed. So yeah, it’s a constant improvement. That’s what I meant by constant questioning and improvement. I just get amazed by how the technology has progressed and evolved. I remember when we were doing the 10 Gig project, one individual was saying, ‘Oh, the max power that you can get in a QSFP is three and a half watts,’ and went on and on. That was kind of stamped into my brain. Look what happened with that, right? I think they’re now at 30 or 40 watts, some ridiculous number. That was technology evolution, and that always happens. Obviously, power becomes a big deal with a lot of the stuff that we do; going faster doesn’t come free.

EEWorld: Where do things stand in 2024? What is next; what are the next steps that are coming in the short term?

D’Ambrosia: From an Ethernet perspective, what we have seen is that with Ethernet interfaces, the sweet spot used to be 1 lane or 4 lanes, right? So you start off with four lanes. XAUI was 4 by 3 and an eighth, 40 Gig was 4 by 10. 100 Gig took off when it was 4 by 25. That usually winds up being your initial networking solution, and then it would go serial. Now we’re seeing a lot of different things. You’ve got 1 lane, 2 lane, 4 lane, and 8 lane. As I said, I won’t be surprised when we go to 16 lanes because some of that’s already been talked about with silicon photonics, because they can do x16 fiber, no problem there. I think it’s only a matter of time. The interface width that people will use, and by interface width I’m also including how many optical fibers in parallel, will continue to get bigger, because going faster is getting harder.

So hopefully in the first quarter of 2024, the 800 Gig standard based on 8 by 100 should be completed and ratified. We’re in the final stages of balloting on that right now. I have meetings to talk about that.

At the same time, we are now seeing the hard work on the 200 Gig signaling starting to begin, and by begin, understand, I’m talking about it from a standards perspective, with writing a standard. I think there’s a difference between technology that one can show in the lab and technology that multiple vendors can develop and that then interoperates. I’m a standards guy, so of course I’m going to say that.

Right now, we’re looking to get draft 1.0 out in the March meeting and start a task force review out of March. Then the hard work begins. It really does help having something on paper to start throwing stones at. To me, that’s my immediate short term, right, getting through all of that stuff.

Hopefully, we’ll have the chip-to-module electrical interface. But there’s also the chip-to-chip electrical interface, and there are the copper cables and the backplanes. It’s about seeing all this running at 200 Gig and getting that into the standard. I think that there are hard questions we have to ask, for we don’t have our baselines yet. So that’s my immediate time frame at this point.

Then you’ll see the next two-and-a-half years of people coming up with the solutions. We do the standard, we raise the questions, but who knows what problems we will find? We don’t know what we don’t know, right?

EEWorld: What’s the most difficult part of holding these standards meetings?

D’Ambrosia: Different people have different definitions for the same word. At one point, we needed a graphic to show the differences.
